This is an elaboration of a post I made in a Swift Forums thread, SE-0419: Swift Backtracing API.

The question was raised whether an official Swift backtracer should try to support code that doesn’t use frame pointers. Which immediately raised the question – in my mind – of whether anyone is still using the “optimisation” of omitting frame pointers anyway. And perhaps more importantly, whether they should still be omitting them.

What is a frame pointer?

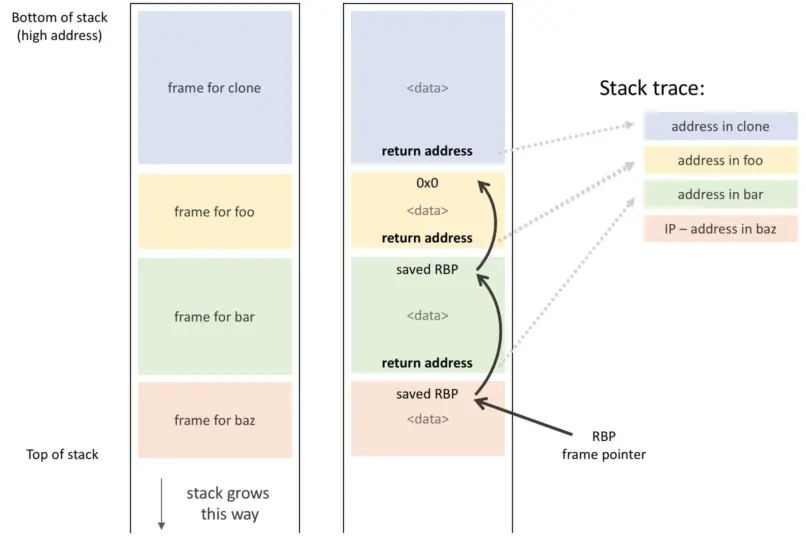

A pointer to a stack frame, held in a well-known location. That location can be in the stack itself (forming a linked list of the stack frames) or in a register (e.g. the x29 register on AArch64, or the RBP register on x86-64).

The controversial part – insofar as there is any controversy – is in dedicating a CPU register to hold a frame pointer (to point to the start of the current stack frame). It’s super convenient for a lot of things, but particularly for debuggers and profilers as it gives them a reliable and very fast way to find the top of the current callstack. But it’s not technically required for the program to function.
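To make the linked-list picture concrete, here is a minimal sketch in C of how a profiler might walk a chain of frame records. It runs over a simulated chain rather than the real machine stack, and the names `frame_record` and `walk_frames` are hypothetical, invented purely for illustration. The two-field layout (saved frame pointer next to the return address) loosely mirrors what AArch64 and x86-64 code typically does when frame pointers are kept, but treat it as an assumption rather than an ABI reference.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical frame record: the caller's saved frame pointer stored
 * alongside the return address into the caller. */
struct frame_record {
    const struct frame_record *caller; /* NULL marks the outermost frame */
    uintptr_t return_address;          /* where execution resumes in the caller */
};

/* Follow the chain from `fp`, writing up to `max` return addresses into
 * `out` (innermost first). Returns the number of addresses written. */
size_t walk_frames(const struct frame_record *fp, uintptr_t *out, size_t max)
{
    assert(out != NULL || max == 0);
    size_t n = 0;
    while (fp != NULL && n < max) {
        out[n++] = fp->return_address; /* one load for the return address... */
        fp = fp->caller;               /* ...and one to step to the caller */
    }
    return n;
}
```

Given a three-deep chain, `walk_frames` yields three return addresses, innermost first. A real sampler would start from the frame-pointer register and would need to validate every pointer before following it, since it may be interrupting arbitrary code.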

No live CPU architectures, that I’m aware of, have a dedicated hardware register for frame pointers. So you nominally have to “give up” a GPR (general-purpose register) in order to have a frame pointer.

Franz Busch pointed out that some notable software still ships with frame pointers omitted, e.g. apparently some major Linux distros. I suspect it’s merely some inertia (or simply oversight) that’s delaying getting people off of that old crutch. I’m not remotely surprised that some big Linux distros are in this bucket – they tend to be absurdly conservative and slow to change[1]. And it’s mind-boggling how much vitriol restoring frame pointers generates from the peanut gallery.

From watches to servers these days – and frankly most of the embedded space, since it’s mostly ARM – everything generally has an ISA with sufficiently many GPRs to negate any big benefit from omitting frame pointers. Giving up one of 31 GPRs (for e.g. AArch64, the dominant CPU architecture family today) is pretty insignificant for the vast majority of code, because almost nothing actually uses all 31 GPRs anyway. It only makes a significant difference[2] when the CPU design is register-starved to begin with, like i386. And those architectures are largely dead, in museums, or restricted to very tiny CPUs as used in some microcontrollers (“embedded” systems).

Even back when i386 et al were still a concern, the proponents of -fomit-frame-pointer often argued not on the potential merits of the trade-off, but rather that it was a “free” performance boost, so even if it was only by a percentage point or two, why not? They of course were either naively or deliberately overlooking the detrimental effects.

There may still be software for which omitting frame pointers is the right trade-off, even on modern CPUs. But I find it hard to believe there are enough cases like that to warrant accommodation in standard tools.

A brief trip back to Apple circa 2007

Back in the brief window of time when i386 was a thing for the Mac (32-bit Intel, e.g. Core Duos[3] as used in the first MacBooks), I was at Apple in the Performance Tools teams (Shark & Instruments), and it was a frustration of ours that -fomit-frame-pointer was a noticeable performance-booster on the register-starved i386[4] architecture[5], so it was hard to just bluntly tell people not to use it… yet, by breaking the ability to profile their code, people who used it often left even bigger performance gains on the table (or otherwise had to invest much more labour into identifying & resolving performance problems).

At one point there was even an Apple-internal debate about whether to abandon kernel-based profiling in favour of user-space profiling[6], because implementing backtracing without frame pointers is possible but very expensive and requires masses of debug metadata (e.g. DWARF), making it highly unpalatable to put in the kernel. Thankfully there were too many obvious problems with user-space profiling, so that notion never really found its legs, and then x86-64 finally arrived[7] and the question became moot.
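For contrast, here is a rough sketch in C of what unwinding looks like when there is no frame pointer to follow. It assumes a drastically simplified, hypothetical unwind table (`unwind_row`, with one fixed frame size per function) and a simulated stack; real DWARF call frame information is far richer, with rules that can change from one instruction to the next. The only point is that every frame costs a table lookup keyed by program counter, instead of a single pointer dereference.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical, heavily simplified stand-in for unwind metadata:
 * one row per function, giving that function's fixed frame size. */
struct unwind_row {
    uintptr_t pc_start; /* first address of the function */
    uintptr_t pc_end;   /* one past the last address of the function */
    size_t frame_words; /* frame size in words, return-address slot included */
};

/* Linear search for the row covering `pc`; NULL if there is none. */
static const struct unwind_row *find_row(const struct unwind_row *table,
                                         size_t rows, uintptr_t pc)
{
    for (size_t i = 0; i < rows; i++) {
        if (pc >= table[i].pc_start && pc < table[i].pc_end)
            return &table[i];
    }
    return NULL;
}

/* Unwind a simulated downward-growing stack, where `stack[sp]` is the newest
 * word and each frame's return address sits in its highest-indexed slot.
 * Writes up to `max` program counters into `out`, innermost first. */
size_t unwind_with_table(const struct unwind_row *table, size_t rows,
                         const uintptr_t *stack, size_t stack_words,
                         uintptr_t pc, size_t sp,
                         uintptr_t *out, size_t max)
{
    assert(table != NULL && stack != NULL && out != NULL);
    size_t n = 0;
    while (pc != 0 && n < max) {
        const struct unwind_row *row = find_row(table, rows, pc);
        if (row == NULL || row->frame_words == 0)
            break;                        /* no metadata: cannot continue */
        size_t cfa = sp + row->frame_words; /* where the caller's frame begins */
        if (cfa > stack_words)
            break;                        /* would read past the simulated stack */
        out[n++] = pc;
        pc = stack[cfa - 1];              /* return address into the caller */
        sp = cfa;
    }
    return n;
}
```

Even in this toy form, the walker needs a metadata table covering every function it might encounter, which hints at why doing the real thing inside a kernel was so unappealing.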

1. e.g. Ubuntu still not officially supporting ImageMagick 7 even though it’s been out for nearly a decade.

2. Aside from the question of register space, there is additional cost to implementing frame pointers, as additional instructions are required around function entry & exit in order to maintain the frame pointers – to push & pop them off the stack, etc. The cost of those is usually insignificant – especially in superscalar microarchitectures, as is the norm – so that aspect is not typically the focus of the controversy.

3. Tangentially, I vaguely recall us Apple engineers kinda hating the Core Duo (Yonah), or more specifically Apple’s choice to use it. Apple used them only for a tiny window of time, from May 2006 to about November 2006 when the Core 2 Duo (Merom) finally replaced them across the line. I don’t recall all the reasons that the Core 2 Duo was superior, but they included that Core 2 Duo corrected the 32-bit regression (for Macs) and performed much better. Anytime Apple releases a Mac with a dud processor in it, like those Core Duos, a lot of Apple engineers die a little inside because they know they’re going to be stuck supporting the damn things for many years even after the last cursed one rolls off the assembly line.

   It’s still a mystery to me why Apple rushed the Intel transition in this regard. They only had to wait six more months and they could have had a clean start on Intel, with no 32-bit to burden them for the next seven years.

4. Why do I keep calling it “i386”? Isn’t it officially “IA-32”? Well, yes, but that’s (a) only retroactively and (b) only ever used by Intel. Though I guess “x86” is probably the more common name? Yet “i386” is in my mental muscle memory. Maybe that’s just how we used to refer to it, at Apple? Maybe just because that’s the name used in gcc / clang arch & target flags?

   Incidentally, `clang -arch i386 -print-supported-cpus` on my M2 MacBook Air still lists Yonah (those damn Core Duos) as supported. Gah! They won’t die! 😆

5. It’s funny how the Intel transition is now heralded as being amazing and how much better Intel Macs were than PPC Macs, but for a while there we lost a lot of things, like a 64-bit architecture, an excellent SIMD implementation, and the notion of more than [effectively] six GPRs.

6. There were at the time already some Apple developer tools that did user-space profiling, most notably Sampler (now a niche feature in Activity Monitor) and early versions of Instruments (in fact Instruments still has the Sampler plug-in which does this, although I can’t really fathom why anyone would ever intentionally use it over the Time Profiler plug-in).

7. In the sense of all Macs adopting it, not just the Mac Pro. It was easy to ignore i386 at that point because it was then all but officially a dead architecture as far as Apple were concerned.