Contents

Swift’s Collection and Sequence protocols provide two primary ways to enumerate (filter, map, reduce, etc): functional-style and imperatively. For example:

let result = data

.filter { 0 != $0 }

.map { $0 * $0 }

.reduce(into: 0, &+=)Or:

var result = 0

for value in data {

if 0 != value {

result &+= value * value

}

}Nominally these are equivalent – they’ll produce the same results for all correctly-implemented Collections and Sequences. So in principle which you use is purely a matter of stylistic preference.

But is it?

Do they actually perform equivalently?

Let’s examine an example that’s a little more involved than the above snippets, but still fundamentally pretty straightforward. The extra processing steps are to help distinguish any performance differences.

The pertinent parts are:

testData.next

.filter { 0 != $0 }

.map { $0.byteSwapped }

.filter { ($0 & 0xff00) >> 8 < $0 & 0xff }

.map { $0.leadingZeroBitCount }

.filter { Int.bitWidth - 8 >= $0 }

.reduce(into: 0, &+=))And the imperative equivalent:

var result = 0

for value in testData.next {

if 0 != value {

let value = value.byteSwapped

if (value & 0xff00) >> 8 < value & 0xff {

let value = value.leadingZeroBitCount

if Int.bitWidth - 8 >= value {

result &+= value

}

}

}

}I’ve published the full source code, in case you’d like to review it further or run it yourself.

How does the performance compare?

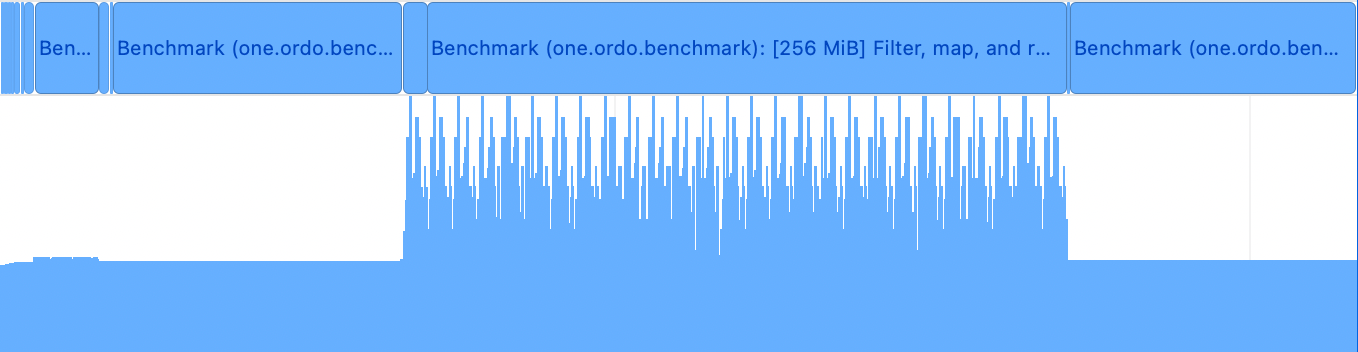

On my iMac Pro (10 cores (Xeon W-2150B)):

| Dataset size | Functional (median) | Imperative (median) | Performance difference |

|---|---|---|---|

| 0 | 234 ns | 133 ns | 1.67x |

| 32 KiB | 57 µs | 16 µs | 3.56x |

| 1 MiB | 1.7 ms | 0.5 ms | 3.36x |

| 8 MiB | 27 ms | 4.2 ms | 6.36x |

| 32 MiB | 147 ms | 17 ms | 8.65x |

On my M2 MacBook Air:

| Dataset size | Functional (median) | Imperative (median) | Performance difference |

|---|---|---|---|

| 0 | 167 ns | 83 ns | 2.01x |

| 32 KiB | 37 µs | 3.6 µs | 10.20x |

| 1 MiB | 1,058µs | 112 µs | 9.45x |

| 8 MiB | 12 ms | 0.9 ms | 13.23x |

| 32 MiB | 50 ms | 3.7 ms | 13.76x |

The imperative version is many times faster! And the performance difference increases as the collection size increases. The functional version starts off not super terrible – at least on the same order magnitude as the imperative version – but it tends rapidly towards being an order of magnitude slower.

Worse, the difference is much more pronounced on more modern CPUs, like Apple Silicon.

What’s going on?

There’s a few compounding factors.

Smaller datasets are more likely to fit into CPU caches (the dataset sizes shown above were chosen to correspond to L1 / L1 / L2 / L3 / RAM, respectively, on my iMac Pro). Working on data in CPU caches is by nature faster – the lower-level the cache the better – and so helps hides inefficiencies.

The functional version creates intermediary Arrays to store the intermediary results of every filter and map operation. This introduces malloc traffic, retains & releases, and writes to memory. The imperative version has none of that overhead – it simply reads every value in the collection once, performing the whole sequence of operations all in one go for each element, using only CPU registers (not so much as a function call, even!).

Sidenote: Dataset load costs

In real-world cases there’s sometimes a substantial baseline cost of reading the dataset in from memory (or disk, or the network), unless your dataset is small enough to fit in caches and was very recently populated there. But even creaky old Intel machines like my iMac Pro have pretty decent memory bandwidth, such that it’s rarely the performance bottleneck unless your algorithm is quite trivial and well-optimised (e.g. to utilise SIMD instructions).

In the benchmarks I somewhat emptied the caches before each run, by alternating between two similar datasets, but this really just means that during each run it has to load in the initial dataset from one further level out (e.g. L2 instead of L1). So these benchmarks aren’t really demonstrating the potential full cost of loading the dataset from RAM – let-alone from disk or the network.

Furthermore, because the functional version is allocating those additional arrays, which take up more space, it tends to overflow caches sooner. For example, in the 1 MiB case, instead of being able to operate entirely out of L2 on the iMac Pro, it has to fall back (at least partially) to L3. L3 is significantly slower – higher latency – than L2, so that makes everything it does noticeably slower. It’s worse when it no longer fits in any CPU caches and has to start going back and forth to RAM, as there’s a big jump in latency between CPU caches and RAM.

Sidenote: Time per element

I didn’t include it in the results table because it’s tangential, but as a quick note: the time per element varies depending on which level of cache the execution fits within.

It’s a pretty consistent 4ns (iMac Pro) / 0.9ns (M2) in the imperative case (memory prefetching is able to keep up with the trivial linear read pattern).

But for the functional version it ranges from 13ns (iMac Pro) / 9ns (M2) for L1, up to 35ns (iMac Pro) / 12ns (M2) for RAM. The memory prefetcher still does an admirable job keeping the performance relatively consistent, but it can’t completely cover up the inefficiencies.

The M2 scales better – suffers less of a performance impact as datasets get larger – because it has both greater memory bandwidth and much lower latency (especially when we get to RAM, as its RAM is in the CPU package rather than miles away across the motherboard on separate DIMMs).

Things get far worse if you exceed available RAM, too. I haven’t shown that in these results – mainly because it’s painfully time-consuming to run such benchmarks on my iMac Pro with 64 GiB of RAM – but suffice to say that once you start swapping, the performance goes completely down the toilet.

So I should avoid the filter & map methods?

For trivially small datasets, the difference might be negligible. Especially if you’re only using the input data once (if you’re reusing it many times over, you might want to look at caching the results anyway, or other such optimisations).

For non-trivial datasets, it is often wise to avoid using filter and map, at least “eagerly”. What does that mean? Well, there are actually two functional styles supported by Collection and Sequence…

Enter lazy…

By default filter, map, and other such operations are “eager” – as soon as they’re executed they enumerate their entire input, generate their entire output, and only then does execution move on to the next operation in the pipeline.

But there is an alternative – lazy versions of all of these. You access them via the lazy property of Collection / Sequence, e.g.:

testData.next

.lazy // New!

.filter { 0 != $0 }

.map { $0.byteSwapped }

.filter { ($0 & 0xff00) >> 8 < $0 & 0xff }

.map { $0.leadingZeroBitCount }

.filter { Int.bitWidth - 8 >= $0 }

.reduce(into: 0, &+=))The lazy property returns a special “lazy” view of the underlying Collection or Sequence. That view looks a lot like the original object – it has the same map, filter, etc methods – but its version of those methods return further lazy views, rather than the actual results of the operation. It doesn’t actually perform the operation until it’s strictly necessary. And when it is necessary – such as when some code like reduce enumerates the results to produce a concrete value – it calculates the results on the fly, with no intermediary storage.

So, that all sounds good – should be faster, right? Let’s see.

On my iMac Pro:

| Dataset size | Lazy functional (median) | Imperative (median) | Performance difference |

|---|---|---|---|

| 0 | 155 ns | 133 ns | 1.17x |

| 32 KiB | 15 µs | 16 µs | 0.94x |

| 1 MiB | 491 µs | 511 µs | 0.96x |

| 8 MiB | 4.1 ms | 4.2 ms | 0.98x |

| 32 MiB | 16 ms | 17 ms | 0.94x |

A dramatic difference versus the eager style. The lazy functional style is still slightly slower than the imperative style for very small collections, such as the empty one here, but it’s actually slightly faster for most!

The Swift compiler is doing a pretty amazing job in this case. Nominally it still needs to do a bunch of overhead – each of those lazy filter and map methods returns a lazy view object, and those objects form a logical chain, and have to call various methods on each other in order to pass data through the pipeline. Indeed, in debug builds that’s exactly what you see in the compiled binary, and the performance is much worse. But with the optimiser engaged, the compiler sees through all that boilerplate and eliminates it, reducing the whole thing down to a very efficient form.

Wait, faster…?

That it’s actually a tad faster is odd as in principle they should be almost identical – any optimisation the compiler can apply to the functional version should also be applicable to the imperative one, since the imperative one is basically an easier subcase where we’ve manually done the hard optimisations already.

Indeed looking at the machine code that the Swift compiler emits, they are very similar. It’s unclear to me why there’s a reliable, measurable performance difference between them – perhaps an accidental consequence of slightly different instruction orderings and register selection.

However, on my M2 MacBook Air:

| Dataset size | Lazy functional (median) | Imperative (median) | Performance difference |

|---|---|---|---|

| 0 | 83 ns | 83 ns | 1.00x |

| 32 KiB | 12 µs | 3.6 µs | 3.31x |

| 1 MiB | 442 µs | 112 µs | 3.95x |

| 8 MiB | 3.6 ms | 0.9 ms | 3.94x |

| 32 MiB | 14 ms | 3.6 ms | 3.85x |

Oh no – while the lazy functional version is several times faster than the eager functional version, it’s still many times slower than the imperative version (for non-empty collections).

It’s not entirely clear to me why this is the case; why the behaviour is so different to x86-64. Looking at the machine code, the compiler’s optimiser has still successfully removed all the boilerplate and simplified it down to a tight loop of trivial integer operations. It appears the difference might arise from the use of conditional instructions (for the functional version) versus branching (for the imperative version). It’s not clear why the compiler uses different approaches for what are otherwise very similar blocks of code. As such, the behaviour might change between compiler versions (this exploration used Swift 5.9) or cases (variations in code structure – or details – might cause the compiler to make different instruction selections).

Alas, explicable or not, the conclusion is clear:

Lazy functional-style performs better than eager functional-style, but still much worse than imperative style.

So my advice is to generally avoid the functional style. Not religiously, but with moderate determination.

The only clear exception, where it’s okay to use the functional style, is if you’re inherently doing single operations at a time, like a simple map where you actually need to store the resulting Array. You’ll get no benefit from using lazy in such cases, nor will the imperative version be meaningfully faster (usually).

Preview: Lazy considered harmful

Unfortunately, in addition to still being slower than the imperative style on modern CPUs, there’s several further aspects of lazy Collections and Sequences that are problematic. I plan to dive deeper into this in a follow-up post, but here’s a teaser:

- In debug builds the performance is poor, because the optimiser essentially isn’t used. Thus debugging (e.g. the regular

Runaction in Xcode) and unit testing may be slowed down significantly. - The optimisations only work if the compiler can see your whole pipeline. If you start splitting things up in your code, or making things dynamic at runtime, the compiler might become unable to make the necessary optimisations. In general if you start storing lazy

Collections /Sequences anywhere, or returning them from properties or methods, you’re likely to miss out on the optimisations. - There are some serious pitfalls and sharp edges around lazy

Collections andSequences which can lead to them being not just slower than their eager brethren, but potentially dangerous (in the sense of not producing the expected results)! Stay tuned for details.

Hi, were these metrics captured using a Debug or Release build configuration? And did you test with iOS devices, or would you expect similar performance metrics as your M2 tests?

Release.

I did not test on any iDevices. I expect they’d perform very similarly, since they share most of their core architecture with the Mac CPUs.

Also, it was a Release configuration, as the benchmarking infrastructure used only builds such.