Reading *SwiftData vs Realm: Performance Comparison* reminded me of an anecdote from my days working on Shark, at Apple.

I don’t really remember the timing – sometime between 2006 and 2010 – but presumably around 2006, as I recall it being when Core Data was still relatively new. For whatever reason, there was a huge push inside Apple to use Core Data everywhere. People were running around all over the place asking of Apple’s frameworks and applications, “can it be made to use Core Data?”

Keep in mind that Core Data at that time was similar to SwiftData now – very limited functionality, and chock full of bugs. But of course it’s the nature of ‘shiny’ new things that their proponents think they’re the second coming and the cure for all ills.

So, I recall sitting down with a couple of folks from the Core Data team who had come to see whether Shark could adopt Core Data. A little like letting the missionaries in, if only out of morbid curiosity.

Have you heard the good news? Core Data is here to save your very data. It’s effortless and divine, and its unintuitive, thread-unsafe API will definitely not be the bane of all its users for the next fifteen years.

Jokes aside, they were in fact earnestly curious whether Shark could use Core Data, instead of its own purpose-built binary formats, for storing & querying its profiling data. It was perhaps the classic case of naively underestimating the complexity of a foreign domain. By my recollection, they assumed our profiling data was just a small handful of homogeneous, relatively trivial records: “At second N, the program ran the function named XYZ” or some such.

I think we (Shark engineers) tried to be open-minded and kind. We were sceptical, but you never know until you actually look. We could see some potential for a more general query capability, for example. But of course the first and most obvious hurdle was: how well does Core Data handle sizeable numbers of records? “Oh yes,” was the response, “it’s great even with tens of thousands of records.”

We asked how it did with tens of millions of records, and that was pretty much the end of the conversation.

Background on the Time Profile data structure

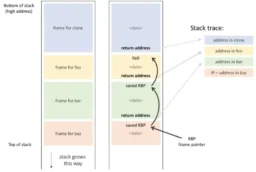

For context, the data in a Shark Time Profile (for example) was basically an array of samples, where each sample recorded the process & thread IDs and the callstack (expressed as an array of pointer-sized values: the first being the current PC, and the rest the return addresses found by walking back up the thread’s stack).
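
Shark’s actual type names and field layout aren’t given here, so the following is only a minimal C sketch of that shape. It assumes a hypothetical fixed-size `Sample` record per sample and a single shared array holding every callstack back to back, with pointer-sized values widened to 64 bits. The names `Sample`, `TimeProfile`, `profile_append` and `profile_stack` are invented for illustration.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical layout: one fixed-size, 16-byte record per sample. */
typedef struct {
    uint32_t pid;          /* process ID */
    uint32_t tid;          /* thread ID */
    uint32_t frame_offset; /* index of this sample's first frame in `frames` */
    uint32_t frame_count;  /* callstack depth; the first frame is the PC */
} Sample;

/* All callstacks live back to back in one shared array, so there is
 * no per-sample (let alone per-frame) heap allocation. */
typedef struct {
    Sample   *samples;
    size_t    sample_count, sample_capacity;
    uint64_t *frames;      /* pointer-sized values, widened to 64 bits */
    size_t    frame_count, frame_capacity;
} TimeProfile;

/* Append one sample and copy its callstack; returns 0 on allocation failure. */
int profile_append(TimeProfile *p, uint32_t pid, uint32_t tid,
                   const uint64_t *stack, uint32_t depth) {
    /* 32-bit offsets keep Sample small but cap the total number of frames. */
    assert(p->frame_count + depth <= UINT32_MAX);
    if (p->sample_count == p->sample_capacity) {
        size_t cap = p->sample_capacity ? p->sample_capacity * 2 : 1024;
        Sample *grown = realloc(p->samples, cap * sizeof *grown);
        if (!grown) return 0;
        p->samples = grown;
        p->sample_capacity = cap;
    }
    if (p->frame_count + depth > p->frame_capacity) {
        size_t cap = p->frame_capacity ? p->frame_capacity : 4096;
        while (cap < p->frame_count + depth) cap *= 2;
        uint64_t *grown = realloc(p->frames, cap * sizeof *grown);
        if (!grown) return 0;
        p->frames = grown;
        p->frame_capacity = cap;
    }
    if (depth) memcpy(p->frames + p->frame_count, stack, depth * sizeof *stack);
    p->samples[p->sample_count++] = (Sample){
        .pid = pid, .tid = tid,
        .frame_offset = (uint32_t)p->frame_count, .frame_count = depth};
    p->frame_count += depth;
    return 1;
}

/* Callstack of sample i, PC first; NULL for an empty stack. */
const uint64_t *profile_stack(const TimeProfile *p, size_t i) {
    const Sample *s = &p->samples[i];
    return s->frame_count ? p->frames + s->frame_offset : NULL;
}

/* Release both arrays and reset the profile to empty. */
void profile_free(TimeProfile *p) {
    free(p->samples);
    free(p->frames);
    *p = (TimeProfile){0};
}
```

Growing two flat arrays geometrically means that adding a sample costs amortised constant time, and reading one back is just an index plus an offset.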

Callstacks back then were relatively small, by modern standards – this was predominantly C/C++/Objective-C code which tended to be far simpler in its structure than e.g. Swift; way fewer closures (blocks), no async suspension points to split logical functions up into numerous implementation functions, etc. So the average was probably something in the low tens. A hundred frames was considered a big callstack (which is sadly funny in hindsight, given that’s trivial by e.g. SwiftUI’s standards 😒).

A useful profile had at least thousands of such samples, and typical profiles were in the tens to hundreds of thousands (the latter usually for All Thread States profiles, particularly those of the whole system). Some profiles could run into the millions or tens of millions (it’s not always easy to predict when a performance problem will show itself, so recording sometimes had to start early and run long).

I’m pretty sure Shark used NSCoding for the overall serdes, but much of what it serialised was huge chunks of (as far as NSCoding was concerned) arbitrary bytes. The file format was fairly efficient overall (though I don’t recall it ever using explicit data compression, nor even delta encoding for callstacks).
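
As recalled above, Shark did not delta-encode callstacks. Purely to illustrate what that technique means here, this is a minimal C sketch of one way it could work. It assumes leaf-first stacks (PC first, as described earlier), which means consecutive samples from the same thread tend to share their outermost frames. Each stack is stored as the count of outermost frames shared with the previous stack, plus only the frames that differ. The function names are hypothetical. A real format would likely pack the two counts far more tightly, for example as variable-length integers, instead of using the full 64-bit words shown here.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Count matching outermost frames, which sit at the END of leaf-first arrays. */
static size_t shared_suffix(const uint64_t *prev, size_t prev_depth,
                            const uint64_t *cur, size_t cur_depth) {
    size_t n = 0;
    while (n < prev_depth && n < cur_depth &&
           prev[prev_depth - 1 - n] == cur[cur_depth - 1 - n])
        n++;
    return n;
}

/* Encode `cur` relative to `prev` (which may be NULL with depth 0) as:
 *   out[0]        = number of outermost frames shared with prev
 *   out[1]        = number k of new, innermost frames
 *   out[2..2+k)   = those new frames, PC first
 * Returns the number of words written (2 + k). */
size_t delta_encode(const uint64_t *prev, size_t prev_depth,
                    const uint64_t *cur, size_t cur_depth, uint64_t *out) {
    size_t shared = shared_suffix(prev, prev_depth, cur, cur_depth);
    size_t fresh = cur_depth - shared;
    out[0] = shared;
    out[1] = fresh;
    if (fresh) memcpy(out + 2, cur, fresh * sizeof *cur);
    return 2 + fresh;
}

/* Rebuild the full stack into `out` from an encoding and the previous stack.
 * Returns the decoded depth. */
size_t delta_decode(const uint64_t *prev, size_t prev_depth,
                    const uint64_t *enc, uint64_t *out) {
    size_t shared = (size_t)enc[0], fresh = (size_t)enc[1];
    assert(shared <= prev_depth);
    if (fresh) memcpy(out, enc + 2, fresh * sizeof *out);
    if (shared)
        memcpy(out + fresh, prev + prev_depth - shared, shared * sizeof *out);
    return fresh + shared;
}
```

Decoding has to proceed sequentially per thread, because each stack depends on the one before it. That fits the forward-scanning access pattern, but random access would probably need periodic full stacks as restart points.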

It wasn’t just the volume of data; it was also the dramatic difference in representation efficiency. The in-memory representation in Shark was about as efficient as it could be: mostly just arrays of compact structs, sometimes with pointers to other arrays (which might share a malloc block to avoid the overhead of small allocations), usually of just uint32_t or uint64_t. The most important operations – indexing to an arbitrary point in the profile’s timeline, then scanning forward over the data – were about as fast as they could possibly be.
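
Those two operations can be sketched in C under a couple of assumptions that the text doesn’t spell out. Each hypothetical `TimedSample` carries a timestamp (the unit is assumed), and samples are stored in ascending time order. Jumping to a point in the timeline is then a binary search, and everything after that is a linear walk through contiguous memory.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical compact sample; the array is assumed sorted by timestamp. */
typedef struct {
    uint64_t timestamp;   /* assumed unit, e.g. ns since recording began */
    uint32_t pid, tid;
} TimedSample;

/* Index of the first sample with timestamp >= t, in O(log n).
 * Returns n if every sample is earlier than t. */
size_t first_sample_at_or_after(const TimedSample *s, size_t n, uint64_t t) {
    size_t lo = 0, hi = n;
    while (lo < hi) {
        size_t mid = lo + (hi - lo) / 2;
        if (s[mid].timestamp < t) lo = mid + 1;
        else hi = mid;
    }
    return lo;
}

/* Count samples of thread `tid` in [t0, t1): jump straight to t0, then
 * scan forward sequentially until t1. */
size_t count_thread_samples(const TimedSample *s, size_t n, uint32_t tid,
                            uint64_t t0, uint64_t t1) {
    assert(t0 <= t1);
    size_t count = 0;
    for (size_t i = first_sample_at_or_after(s, n, t0);
         i < n && s[i].timestamp < t1; i++)
        if (s[i].tid == tid) count++;
    return count;
}
```

The forward scan touches memory strictly in order, which is the access pattern caches and hardware prefetchers handle best.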

In contrast, Core Data would have required an entire object (an NSManagedObject subclass instance) for at least every sample, if not for every uintXX_t in the callstack (depending on how ‘pure’ you wanted the design to be). It would have increased memory usage by at least an order of magnitude – and Shark already struggled with big profiles on the hardware of the day, which typically had just a couple of GiB of RAM. Even the most trivial operations – like reading the data in from disk and iterating over it sequentially – would have been thousands of times slower.
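
A back-of-the-envelope estimate makes the scale concrete. It uses illustrative figures at the lower end of the ranges above, which are assumptions and not measurements from Shark: 10 million samples, an average depth of 20 frames stored as 8-byte values, and roughly 16 bytes per sample for the process ID, thread ID and callstack bookkeeping.

$$
10^{7}\ \text{samples} \times \left(16 + 20 \times 8\right)\ \frac{\text{bytes}}{\text{sample}} = 1.76 \times 10^{9}\ \text{bytes} \approx 1.64\ \text{GiB}
$$

So even a fully compact representation of such a profile needs about 1.6 GiB. An order-of-magnitude overhead would push that to around 16 GiB, far beyond a machine with a couple of GiB of RAM.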

In defence of the Core Data folks in the meeting – and I don’t remember who specifically it was – they never tried to misrepresent or exaggerate what Core Data could do. I seem to recall them being quite nice people. But as soon as we started explaining the type and volume of data that we worked with, they clearly gave up on any kind of pitch. Core Data was designed for developer convenience, not runtime efficiency or performance.

It’s never ceased to surprise and disappoint me how many folks indiscriminately apply generalised data storage systems – particularly SQLite and MySQL, or wrappers around them. Usually for the same reasons: perceived convenience for themselves, right now, not necessarily efficiency (nor the convenience of their successors).

I guess by modern standards SQLite is considered efficient and fast, but – hah – back in my day SQLite was what you used when you didn’t have time to write your own efficient and fast persistent data management system.

See also JSON and its older sister XML. 😔

@everything Oh man you worked on Shark!! I was a heavy Shark *user* at Apple around that same time!

To be clear, though, I worked on Shark for only a couple of years, and my contributions were modest. I worked in particular on GraphKit, the charting framework that Shark (among other Apple applications) used, and on CoreProfile, the framework that was built to support Shark 5 but ultimately only ever got used by Instruments.

@everything Get out of here, I used GraphKit for a custom internal tool

Hah, that wasn’t tLog or whatever it was called, was it? I recall that Randy Overbeck used it a lot, and that it was one of the primary drivers of GraphKit being optimised to support hundreds of millions of data points on a single chart (it’s amazing what some clever rasteriser-aware data pre-processing and the CoreGraphics APIs can do).

I was pretty proud of that, I must admit. To this day I’m not aware of any charting library that is as performant while still producing technically correct results (meaning not just plot element placement but down to line joins and end caps).

Though of course that was all CPU-based. Presumably now it could be accelerated by GPUs. I vaguely recall exploring that a bit, way back then, but OpenGL wasn’t going to make my life easy if I went that route.
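
The “rasteriser-aware data pre-processing” mentioned above isn’t described in detail, so this is not a reconstruction of GraphKit. One widely used technique of that general kind is min/max decimation. Evenly spaced points are binned into pixel columns, and only the smallest and largest value per column is kept. A vertical span from min to max in each column then covers every value that landed there, so the drawing cost depends on the chart’s width and not on the number of points. The function name is hypothetical, and on its own this approach doesn’t guarantee the exact line joins and end caps described above.

```c
#include <assert.h>
#include <float.h>
#include <stddef.h>
#include <stdint.h>

/* Reduce n evenly spaced values to one (min, max) pair per pixel column. */
void minmax_per_column(const double *y, size_t n, size_t columns,
                       double *col_min, double *col_max) {
    assert(columns > 0);
    /* Sentinels: a column that receives no points ends with min > max. */
    for (size_t c = 0; c < columns; c++) {
        col_min[c] = DBL_MAX;
        col_max[c] = -DBL_MAX;
    }
    for (size_t i = 0; i < n; i++) {
        /* Map point i to its column; 64-bit maths avoids overflow even
         * with hundreds of millions of points. */
        size_t c = (size_t)((uint64_t)i * columns / n);
        if (y[i] < col_min[c]) col_min[c] = y[i];
        if (y[i] > col_max[c]) col_max[c] = y[i];
    }
}
```

The reduction is a single sequential pass over the data, which is why it also suits a CPU-based renderer.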