I just read up a bit on io_uring, prompted by a Swift Forums thread relating to it, and it made me laugh. To a lot of people it’s an amazing new[ish] high-performance I/O system for Linux. Which it is (albeit with some serious security concerns, apparently). A lot of people are very excited by it. Which they should be. But it’s not new or novel, despite what many folks seem to think.

It immediately reminded me of the Shark 5 re-implementation of the profiling interface between the kernel and Shark. Shark 5 of course didn’t actually survive to birth – it was snuffed out by politics and, admittedly, a bit of our own hubris in the Shark team – but that underlying infrastructure did, as the guts of Instruments’ Time Profiler and System Trace features.

I’m not claiming that what we came up with for Shark 5 was novel, either. As far as we knew it was novel within our domain of profiling tools, but I’d be amazed if there weren’t implementations of the same idea decades earlier.

In Shark 4 and earlier, userspace would allocate a single big buffer of memory for the profiling data and hand it [back] to the kernel¹. The kernel – specifically the Shark kernel extension – would write into that buffer, and when the buffer was full, profiling would end². The userspace driver – Shark – could technically start profiling again immediately, but of course you’d often have a gap in your profiling – potentially a big one, for the more expensive profiling modes (e.g. System Trace) or on heavily-loaded machines. Shark didn’t run with elevated priority, as far as I recall, so it could get crowded off the CPU(s) by other programs. All communication between user & kernel space was via Mach messages, which are ultimately syscalls, and it was time-sensitive, since kernel- & user-space had to stay in lock-step the whole time.
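To make the Shark 4 limitation concrete, here is a minimal Python sketch of the single-buffer model: one fixed-size buffer that the "kernel" fills until a sample no longer fits, at which point recording simply stops. It is not the real kernel-extension interface; the class and function names, the 1 kHz sample rate, and the 64-byte sample size are all hypothetical, chosen only to show the arithmetic.

```python
class SingleBufferRecorder:
    """Hypothetical model of the old scheme: one fixed buffer, stop when full."""

    def __init__(self, capacity_bytes: int):
        self.capacity = capacity_bytes
        self.buffer = bytearray()
        self.recording = True

    def write_sample(self, sample: bytes) -> bool:
        """Append a sample; return False (and stop recording) once it won't fit."""
        if not self.recording:
            return False
        if len(self.buffer) + len(sample) > self.capacity:
            # No room left: profiling ends, whatever duration the user asked for.
            self.recording = False
            return False
        self.buffer += sample
        return True


def required_capacity(sample_rate_hz: int, sample_size_bytes: int, duration_s: int) -> int:
    """Buffer size needed up front to cover the whole requested duration."""
    return sample_rate_hz * sample_size_bytes * duration_s


if __name__ == "__main__":
    # Assumed numbers, purely for illustration: 1 kHz sampling, 64-byte samples, 30 s.
    needed = required_capacity(1000, 64, 30)
    rec = SingleBufferRecorder(capacity_bytes=needed // 2)  # deliberately undersized
    written = 0
    while rec.write_sample(bytes(64)):
        written += 1
    # prints: needed 1920000 bytes, recorded 15000 samples before stopping
    print(f"needed {needed} bytes, recorded {written} samples before stopping")
```

The sizing function is the crux: the whole buffer has to be provisioned before recording starts, sized for the busiest case, even if the session ends up producing far less data.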

For Shark 5, we wanted to up the ante and remove limitations. We wanted to be able to record indefinitely, limited only by disk space. More importantly, we also wanted to be able to show profiling results live.

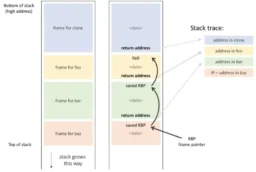

So, we – and I use the term loosely, as I think it was mostly Rick Altherr, possibly with the help of Ryan du Bois – came up with a mechanism that’s very similar to io_uring, but years earlier, in ~2008. Userspace would allocate multiple smaller buffers – which were also easier to acquire, given the requirement that they be physically contiguous – and hand them to the kernel as needed. Userspace merely needed to stay ahead of the kernel’s consumption, which didn’t necessarily mean pre-allocating all the buffers. As each of those buffers filled up with profiling data from the kernel, it would be made available back to the userspace side³. Shark (in userspace) would optionally – if in ‘live’ mode, or if running short on empty buffers – process those buffers while profiling was still underway, freeing them up to go back into the ring for the kernel’s use. It did slightly increase the probability of perturbing the program(s) being profiled, but that was mostly just a matter of making the buffers large enough to amortise the cost of processing & handling them. I don’t recall the full extent of the processing, but I think it was not much more than copying the data out into non-wired memory (maybe even a memory-mapped file?). That was cheaper than allocating and wiring new buffers.
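The heart of the scheme is a small pool of buffers cycling between two queues, not unlike io_uring's submission and completion rings. Here is a minimal, single-threaded Python sketch of that ownership hand-off, under some loud assumptions: the "kernel" and "userspace" are just two sets of methods on one object, buffers are ordinary bytearrays rather than wired, physically contiguous memory, samples are assumed never to exceed a buffer's size, and every class and method name is hypothetical rather than the actual Shark interface.

```python
from collections import deque


class Buffer:
    """One fixed-size buffer (in the real thing: wired, contiguous memory)."""

    def __init__(self, size: int):
        self.size = size
        self.data = bytearray()

    def has_room(self, n: int) -> bool:
        return len(self.data) + n <= self.size


class BufferRing:
    """Hypothetical model: empty buffers go to the kernel, full ones come back."""

    def __init__(self, num_buffers: int, buffer_size: int):
        self.empty = deque(Buffer(buffer_size) for _ in range(num_buffers))  # available to kernel
        self.full = deque()  # filled by the kernel, awaiting userspace
        self.current = None  # buffer the kernel is writing into right now
        self.dropped = 0     # samples lost because userspace fell behind

    # --- "kernel" side ---
    def kernel_write(self, sample: bytes) -> None:
        if self.current is not None and not self.current.has_room(len(sample)):
            self.full.append(self.current)  # hand the full buffer back to userspace
            self.current = None
        if self.current is None:
            if not self.empty:
                self.dropped += 1  # userspace didn't stay ahead of the kernel
                return
            self.current = self.empty.popleft()
        self.current.data += sample

    # --- "userspace" side ---
    def drain(self, sink: list) -> int:
        """Copy out every full buffer, then recycle it back to the kernel."""
        drained = 0
        while self.full:
            buf = self.full.popleft()
            sink.append(bytes(buf.data))  # e.g. into ordinary, pageable memory
            buf.data.clear()
            self.empty.append(buf)
            drained += 1
        return drained


if __name__ == "__main__":
    ring = BufferRing(num_buffers=3, buffer_size=256)
    saved = []
    for i in range(10_000):
        ring.kernel_write(bytes(64))
        if i % 8 == 7:  # userspace wakes up periodically to drain
            ring.drain(saved)
    # prints: 2499 buffers saved, 0 samples dropped
    print(len(saved), "buffers saved,", ring.dropped, "samples dropped")
```

With only three small buffers, recording can continue indefinitely; samples are lost only if userspace stops draining and the kernel runs out of empty buffers, which is the "stay ahead of the kernel" requirement described above.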

It worked really well, and it’s possibly still the implementation in use today – while it appears a lot of the frameworks (e.g. CoreProfile) have disappeared from macOS, presumably rewritten somewhere else, I’d be surprised if the kernel-user interface itself has changed much. It was pretty much perfect.

The creation of this new, superior profiling system also spurred me to create rample, a CLI tool kind of like top which showed you what your CPUs were doing in real time⁴ as a heavy tree, much like you’d get in a Time Profile in Shark (and later Instruments). It was super quick to write – just a day or two, I believe – and yet I unexpectedly found it more useful than Shark itself. Being able to see what’s chewing on the CPU, including inside the kernel, practically instantly (by virtue of how lightweight it is to launch a simple CLI program) was incredibly useful. Instruments is the closest you can get on a Mac today, but it’s super slow and clunky in comparison. One of my top regrets from my time at Apple is that I didn’t get rample into Mac OS X, or at least into the Dev Tools.
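A "heavy" tree, in Shark's terminology, is the bottom-up view: the roots are the functions that were actually executing when each sample was taken, and beneath each one are its callers, weighted by sample count. The post doesn't describe how rample built its tree, so this Python is only a hypothetical sketch of that aggregation, assuming each sampled stack arrives as a list of function names ordered outermost caller first. The names `Node`, `build_heavy_tree`, and `render` are illustrative, not rample's.

```python
class Node:
    """One frame in the heavy tree, with the number of samples passing through it."""

    def __init__(self, name: str):
        self.name = name
        self.count = 0
        self.children = {}

    def child(self, name: str) -> "Node":
        if name not in self.children:
            self.children[name] = Node(name)
        return self.children[name]


def build_heavy_tree(samples):
    """Bottom-up aggregation: roots are stack leaves (on-CPU functions),
    and each level below is the caller of the level above."""
    root = Node("<all>")
    for stack in samples:  # outermost caller first, leaf last
        root.count += 1
        node = root
        for frame in reversed(stack):  # walk from the leaf out to its callers
            node = node.child(frame)
            node.count += 1
    return root


def render(node, depth=0, out=None):
    """Return indented lines with the heaviest branches first."""
    if out is None:
        out = []
    for child in sorted(node.children.values(), key=lambda n: -n.count):
        out.append(f"{'  ' * depth}{child.count:5d} {child.name}")
        render(child, depth + 1, out)
    return out


if __name__ == "__main__":
    samples = [
        ["main", "parse", "memcpy"],
        ["main", "draw", "memcpy"],
        ["main", "parse", "lex"],
        ["main", "parse", "memcpy"],
    ]
    print("\n".join(render(build_heavy_tree(samples))))
```

A top-like tool could plausibly rebuild and redraw such a tree every second or so from whatever buffers had just been drained, though that refresh loop is an assumption, not a description of rample.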

- I don’t recall precisely why, but it was apparently better (or only possible?) to allocate the buffer in userspace, even though that was ultimately done with syscalls serviced by the kernel. I think it had something to do with failures being easier to handle there, like running out of memory, in particular contiguous memory – the memory was wired during use so that the kernel wouldn’t have to deal with either the practical or the performance problems of page faults while recording profiling data. ↩︎

- This was a problem because end users generally specified profiling sessions to run for a fixed duration, so the buffer had to be sized to avoid filling prematurely, which meant wasting memory. ↩︎

- Technically they were always available – we didn’t bother changing access permissions – but the userspace app would coordinate with the kernel via an elegant lockfree state machine. That state machine was a superb design – it deserves its own detailed nostalgia trip. ↩︎

- Well, updated every second or so, like top. ↩︎
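The lockfree coordination mentioned in the third footnote isn't described in any detail, so everything below (the four state names, the transition table, the class) is a hypothetical illustration of the general pattern rather than the actual design: each buffer carries a state word, and each side claims a buffer only by atomically swapping that word from the state it expects to the next one. Python has no user-accessible atomic compare-and-swap, so a `threading.Lock` stands in for one, which means this model is not itself lock-free; in C you'd reach for something like C11's `atomic_compare_exchange_strong`.

```python
import threading
from enum import Enum


class State(Enum):
    FREE = 0      # empty, owned by userspace
    KERNEL = 1    # handed to the kernel, being filled
    FULL = 2      # filled by the kernel, waiting for userspace
    DRAINING = 3  # userspace is copying the data out


# The only legal transitions (hypothetical); anything else is a coordination bug.
LEGAL = {
    (State.FREE, State.KERNEL),
    (State.KERNEL, State.FULL),
    (State.FULL, State.DRAINING),
    (State.DRAINING, State.FREE),
}


class BufferSlot:
    """Per-buffer state word. A real lock-free version would use a hardware
    compare-and-swap; here a lock only emulates that atomicity."""

    def __init__(self):
        self._state = State.FREE
        self._guard = threading.Lock()

    @property
    def state(self) -> State:
        return self._state

    def compare_and_swap(self, expected: State, new: State) -> bool:
        """Move to `new` only if currently `expected`; return whether it happened."""
        if (expected, new) not in LEGAL:
            raise ValueError(f"illegal transition {expected.name} -> {new.name}")
        with self._guard:
            if self._state is not expected:
                return False
            self._state = new
            return True


if __name__ == "__main__":
    slot = BufferSlot()
    slot.compare_and_swap(State.FREE, State.KERNEL)           # userspace hands it over
    print(slot.compare_and_swap(State.FULL, State.DRAINING))  # False: kernel not done yet
    slot.compare_and_swap(State.KERNEL, State.FULL)           # kernel marks it full
    print(slot.compare_and_swap(State.FULL, State.DRAINING))  # True: userspace claims it
```

Because a failed swap just returns `False`, neither side ever blocks waiting on the other; it simply moves on to another buffer, which fits the footnote's point that the buffers were always technically accessible and only the state machine governed who could touch them.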