
NSImage is formally documented as largely not thread-safe:

The following classes and functions are generally not thread-safe. In most cases, you can use these classes from any thread as long as you use them from only one thread at a time. Check the class documentation for additional details.

Apple’s Threading Programming Guide > Appendix A: Thread Safety Summary, subsection Application Kit Framework Thread Safety

What “in most cases” means is left to the reader’s imagination. Apple adds a little addendum for NSImage specifically:

One thread can create an NSImage object, draw to the image buffer, and pass it off to the main thread for drawing. The underlying image cache is shared among all threads. For more information about images and how caching works, see Cocoa Drawing Guide.

Apple’s Threading Programming Guide > Appendix A: Thread Safety Summary, subsection NSImage Restrictions

For a start, it’s talking only about creating an NSImage from scratch, not loading it from serialised form (e.g. a file, a pasteboard, etc). It doesn’t even deign to mention those other, much more common cases.

And even for that one mentioned use case, what does it mean, exactly? What “image cache” is it referring to?

I don’t have authoritative answers. The documentation is so infuriatingly vague that Apple could do basically anything to the implementation, between macOS updates, and claim to have broken no promises.

What I do have is some empirical data and the results of some reverse engineering (shout out to Hopper), from macOS 14.2 Sonoma.

NSImage 101

NSImages can represent a wide range of imagery. Most uses of them are probably for bitmap data (i.e. what you find in common image formats like WebP & AVIF), but NSImage also supports ‘raw’ images (e.g. Nikon NEF) as well as vector data (e.g. SVG & PDF). It also has a plug-in mechanism of sorts, so the supported image formats can be extended dynamically at runtime.

You can fetch the full list of supported types from NSImage.imageTypes – although it doesn’t distinguish between those it can read vs those it can write. Fortunately, sips --formats in Terminal gives you the same list with additional metadata. On my machine that list happens to be:

| UTI | Extension | Writable |
|---|---|---|
| com.adobe.pdf | pdf | Writable |
| com.adobe.photoshop-image | psd | Writable |
| com.adobe.raw-image | dng | |
| com.apple.atx | -- | Writable |
| com.apple.icns | icns | Writable |
| com.apple.pict | pict | |
| com.canon.cr2-raw-image | cr2 | |
| com.canon.cr3-raw-image | cr3 | |
| com.canon.crw-raw-image | crw | |
| com.canon.tif-raw-image | tif | |
| com.compuserve.gif | gif | Writable |
| com.dxo.raw-image | dxo | |
| com.epson.raw-image | erf | |
| com.fuji.raw-image | raf | |
| com.hasselblad.3fr-raw-image | 3fr | |
| com.hasselblad.fff-raw-image | fff | |
| com.ilm.openexr-image | exr | Writable |
| com.kodak.raw-image | dcr | |
| com.konicaminolta.raw-image | mrw | |
| com.leafamerica.raw-image | mos | |
| com.leica.raw-image | raw | |
| com.leica.rwl-raw-image | rwl | |
| com.microsoft.bmp | bmp | Writable |
| com.microsoft.cur | -- | |
| com.microsoft.dds | dds | Writable |
| com.microsoft.ico | ico | Writable |
| com.nikon.nrw-raw-image | nrw | |
| com.nikon.raw-image | nef | |
| com.olympus.or-raw-image | orf | |
| com.olympus.raw-image | orf | |
| com.olympus.sr-raw-image | orf | |
| com.panasonic.raw-image | raw | |
| com.panasonic.rw2-raw-image | rw2 | |
| com.pentax.raw-image | pef | |
| com.phaseone.raw-image | iiq | |
| com.samsung.raw-image | srw | |
| com.sgi.sgi-image | sgi | |
| com.sony.arw-raw-image | arw | |
| com.sony.raw-image | srf | |
| com.sony.sr2-raw-image | sr2 | |
| com.truevision.tga-image | tga | Writable |
| org.khronos.astc | astc | Writable |
| org.khronos.ktx | ktx | Writable |
| org.khronos.ktx2 | -- | Writable |
| org.webmproject.webp | webp | |
| public.avci | avci | |
| public.avif | avif | |
| public.avis | -- | |
| public.heic | heic | Writable |
| public.heics | heics | Writable |
| public.heif | heif | |
| public.jpeg | jpeg | Writable |
| public.jpeg-2000 | jp2 | Writable |
| public.jpeg-xl | jxl | |
| public.mpo-image | mpo | |
| public.pbm | pbm | Writable |
| public.png | png | Writable |
| public.pvr | pvr | Writable |
| public.radiance | pic | |
| public.svg-image | svg | |
| public.tiff | tiff | Writable |

Sidenote: notice how it supports modern formats like WebP, AVIF, and JPEG-XL, but only for reading, not writing. 😕
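If you want that read/write split programmatically rather than via sips, one option is to ask ImageIO directly: CGImageSourceCopyTypeIdentifiers lists the types it can decode and CGImageDestinationCopyTypeIdentifiers the types it can encode. A minimal sketch, assuming ImageIO's lists are a reasonable proxy for NSImage's bitmap formats (they won't match NSImage.imageTypes exactly, since NSImage also has its own NSImageRep subclasses, e.g. for PDF); imageIOTypeSupport is a hypothetical helper name:

```swift
import AppKit
import ImageIO

/// Returns the UTIs ImageIO can decode (readable) and encode (writable).
/// Assumption: these approximate NSImage's bitmap capabilities.
func imageIOTypeSupport() -> (readable: Set<String>, writable: Set<String>) {
    // Both functions return a CFArray of UTI strings.
    let readable = CGImageSourceCopyTypeIdentifiers() as? [String] ?? []
    let writable = CGImageDestinationCopyTypeIdentifiers() as? [String] ?? []
    return (Set(readable), Set(writable))
}

let support = imageIOTypeSupport()

// Types NSImage lists, that ImageIO can read, but that ImageIO cannot write.
let readOnly = Set(NSImage.imageTypes)
    .intersection(support.readable)
    .subtracting(support.writable)
    .sorted()

print(readOnly.joined(separator: "\n"))
```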

It used to be that NSImage was pretty much a one-stop-shop for typical app image I/O needs, but Apple have for some reason crippled it over the years. I feel like this is part of a larger trend of Apple providing increasingly less functionality, more complexity, and less coherence in their frameworks.

An NSImage can have multiple ‘representations’. These are essentially just different versions of the image. One representation might be the original vector form (e.g. an NSPDFImageRep). Another might be a rasterisation of that at a certain resolution. Yet another might be a rasterisation at a different resolution.

It gets more complicated, however, because some representations are merely proxies for other frameworks’ representations, like CGImage, CIImage or somesuch.

Nonetheless, for the simple case of a static bitmap image read from a file, generally NSImage produces just one representation, which is the full bitmap (as an NSBitmapImageRep). That’s the only case I’m going to discuss here, for simplicity’s sake (though likely the lessons apply to the other cases too).
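To check what a given NSImage actually holds, you can walk its representations array. A minimal sketch; describeRepresentations is a hypothetical helper name and the output format is arbitrary:

```swift
import AppKit

/// Lists each representation's concrete class and pixel dimensions.
/// For resolution-independent reps (e.g. NSPDFImageRep) the pixel
/// dimensions may not be meaningful.
func describeRepresentations(of image: NSImage) -> [String] {
    image.representations.map { rep in
        "\(type(of: rep)) \(rep.pixelsWide)×\(rep.pixelsHigh)"
    }
}
```

For a typical bitmap file you would expect a single NSBitmapImageRep entry.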

Accessing / using an NSImage

Generally, to use an NSImage (e.g. to actually draw it to the screen) you need a bitmap representation.

Unless you manually create an NSImage from an in-memory bitmap, the bitmap representation is not loaded initially, but rather only when first needed.

☝️ You can usually obtain the NSBitmapImageRep (via e.g. bestRepresentation(for: .infinite, context: nil, hints: nil)) even before it’s actually been loaded – initially it’s largely just a shell that knows its metadata (e.g. dimensions and colour space) but not yet its actual imagery.
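A sketch of fetching that shell and reading only its metadata, deliberately never touching bitmapData. bitmapMetadata is a hypothetical helper name, and whether each metadata accessor stays on the cheap, not-yet-loaded path is an AppKit implementation detail, so treat that as an assumption:

```swift
import AppKit

/// Returns the image's bitmap rep and some of its metadata without calling
/// bitmapData (which is what triggers the actual load).
func bitmapMetadata(of image: NSImage)
    -> (rep: NSBitmapImageRep, width: Int, height: Int, colorSpace: NSColorSpace)? {
    // NSRect.infinite asks for the "best" rep with no size constraint.
    guard let rep = image.bestRepresentation(for: .infinite, context: nil, hints: nil)
            as? NSBitmapImageRep else {
        return nil  // e.g. a vector-only image
    }
    return (rep, rep.pixelsWide, rep.pixelsHigh, rep.colorSpace)
}
```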

Ultimately the load is triggered when something asks for the raw bytes of the bitmap (either you, directly in your code, or indirectly when you e.g. ask AppKit to draw the image).

Fetching the raw bytes of a bitmap from NSBitmapImageRep is not a trivial exercise. I’m going to gloss over the complexities (like planar data formats) and just talk about the common case of single-plane (“interleaved channels”) bitmaps.

For those, you can access the raw bytes via the bitmapData property.
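For an interleaved, 8-bits-per-sample rep, reading one pixel out of that buffer is mostly index arithmetic. A minimal sketch (pixelSamples is a hypothetical helper name); note that it calls bitmapData, so per the rest of this post it should only run on one thread at a time:

```swift
import AppKit

/// Returns the raw samples of pixel (x, y) from a single-plane, 8-bit-per-sample
/// NSBitmapImageRep, or nil for other layouts or out-of-range coordinates.
func pixelSamples(in rep: NSBitmapImageRep, x: Int, y: Int) -> [UInt8]? {
    guard !rep.isPlanar, rep.bitsPerSample == 8,
          x >= 0, y >= 0, x < rep.pixelsWide, y < rep.pixelsHigh,
          let base = rep.bitmapData else { return nil }
    // bitsPerPixel can include padding (e.g. 32 bits for 3 samples).
    let bytesPerPixel = rep.bitsPerPixel / 8
    // Rows may be padded, so step by bytesPerRow, not width × bytesPerPixel.
    let offset = y * rep.bytesPerRow + x * bytesPerPixel
    return Array(UnsafeBufferPointer(start: base + offset, count: rep.samplesPerPixel))
}
```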

Nominally, bitmapData just returns a uint8_t*.

In fact, bitmapData calls a lot of internal methods in a complicated fashion, with many possible code paths. What exactly it does depends on the underlying source of data, but in a nutshell it checks if the desired data has already been created / loaded (it’s cached in an instance variable) and if not it loads it, e.g. by calling CGImageSourceCreateImageAtIndex.

That’s what makes NSImage dangerous to use from multiple threads simultaneously.

There is no mutual exclusion protecting any of these code paths.
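To make the missing piece concrete, here is a toy model in plain Foundation of a lazily loaded cache with the lock it needs. It is not AppKit's code; LazyBuffer and its members are hypothetical. With the lock, the check-then-load-then-store sequence is atomic, so concurrent callers trigger exactly one load. Without it, several threads could each see an empty cache and load in parallel. (Swift arrays are value types, so this models only the duplicated loads, not the dangling pointers.)

```swift
import Foundation
import Dispatch

/// Toy stand-in for NSBitmapImageRep's lazily loaded, cached bitmap data.
final class LazyBuffer: @unchecked Sendable {
    private let lock = NSLock()
    private var cached: [UInt8]?       // analogous to the cached-data instance variable
    private(set) var loadCount = 0     // how many times the "expensive" load ran

    /// Returns the cached bytes, loading them on first use, under the lock.
    func bytes() -> [UInt8] {
        lock.lock()
        defer { lock.unlock() }
        if let cached = cached { return cached }
        loadCount += 1
        let fresh = [UInt8](repeating: 0xAB, count: 16)  // pretend decode
        cached = fresh
        return fresh
    }
}

let buffer = LazyBuffer()
DispatchQueue.concurrentPerform(iterations: 64) { _ in
    _ = buffer.bytes()
}
print(buffer.loadCount)  // 1
```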

Reading the cached bitmap data is essentially read-only (just some retain/autorelease traffic), so it’s safe to call from multiple threads concurrently, as long as something ensures the NSBitmapImageRep is kept alive the whole time and not mutated.

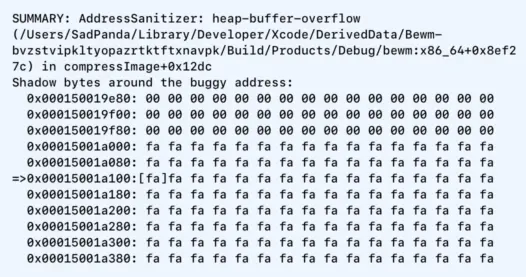

But loading or modifying it is not. As such, if you call bitmapData from multiple threads concurrently, and you don’t know for sure that it’s already fully loaded, you get a data race (also known as a “WTF does my app crash randomly?!” condition).

The consequences of that race vary. Maybe you “win” the race – one thread happens to run virtually to completion of bitmapData first, storing the fresh backing data into the caching instance member, and then all the other threads run and just return that same value – the ideal situation as everything works as intended.

Maybe you “lose” the race: every concurrent thread checks simultaneously and sees there’s no cached value, so they all – in parallel and redundantly – load the bitmap data and store it into the cache. Each returns the one it created, even though ultimately only one thread wins – the last one to write into the cache – and the duplicate bitmap data that all the earlier threads created is deallocated, even though pointers to it have already been returned to you, and you might be in the middle of using them. That causes a crash with a memory protection fault (or worse, a read from some other memory allocation that happened to be placed at the same address afterwards, yielding essentially garbage).

Hypocritically, this is because Apple don’t follow their own rules on escaping pointers:

The pointer you pass to the function is only guaranteed to be valid for the duration of the function call. Do not persist the pointer and access it after the function has returned.

Apple’s Swift Standard Library > Manual Memory Management > Calling Functions With Pointer Parameters, subsection Pass a Constant Pointer as a Parameter.

The source code for the relevant NSBitmapImageRep methods is essentially:

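```objc
// Essentially what NSBitmapImageRep does (reconstructed from disassembly,
// not Apple's verbatim source).
- (uint8_t *)bitmapData {
    uint8_t **result = nil;
    [self getBitmapDataPlanes:&result];
    // Dereferences the pointer captured inside the block below, after that
    // block (and whatever kept its argument valid) has already returned.
    return *result;
}

- (void)getBitmapDataPlanes:(uint8_t ***)output {
    [self _performBlockUsingBackingMutableData:^void (uint8_t *dataPlanes[5]) {
        // The pointer escapes the block: `dataPlanes` is only valid while
        // the block runs, yet it's stored into *output for later use.
        *output = dataPlanes;
    }];
}
```

🤦‍♂️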

The bitmapData property and getBitmapDataPlanes method are fundamentally unsafe.

Unfortunately, while _performBlockUsingBackingMutableData and its many similar siblings can be made safe, they are (a) all private and (b) not currently enforcing the necessary mutual exclusion. This could be corrected by Apple in future (although I wouldn’t hold your breath).

Nominally the colorAt(x:y:) method could also be safe, but it currently lacks the same underlying mutual exclusion – and even if it didn’t, the performance when using it is atrocious due to the Objective-C method call overhead. Even utilising IMP caching, the performance is still terrible compared to directly accessing the bitmap byte buffer.
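For comparison, the fast path walks the byte buffer directly, one row at a time, instead of making an Objective-C call per pixel. A minimal sketch that averages the first sample of every pixel; averageFirstSample is a hypothetical helper name, and it assumes a single-plane, 8-bits-per-sample rep:

```swift
import AppKit

/// Averages the first sample in memory order over the whole image.
/// That's red for RGB(A) data, but alpha if bitmapFormat contains .alphaFirst.
func averageFirstSample(of rep: NSBitmapImageRep) -> Double? {
    guard !rep.isPlanar, rep.bitsPerSample == 8,
          rep.pixelsWide > 0, rep.pixelsHigh > 0,
          let base = rep.bitmapData else { return nil }
    let bytesPerPixel = rep.bitsPerPixel / 8
    var total = 0
    for y in 0..<rep.pixelsHigh {
        let row = base + y * rep.bytesPerRow  // rows may be padded
        for x in 0..<rep.pixelsWide {
            total += Int(row[x * bytesPerPixel])
        }
    }
    return Double(total) / Double(rep.pixelsWide * rep.pixelsHigh)
}
```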

Why does it matter that NSImage is not thread-safe?

The crux of the problem is that you often have no good choice about it, because NSImage is a currency type used widely throughout Apple’s own frameworks, and you often have no control over what thread it’s created or used on.

For example, even using the very latest Apple APIs such as SwiftUI’s dropDestination(for:action:isTargeted:), you cannot control which thread the NSImages are created on (it appears to be the main thread, although that API provides no guarantees).

Similarly, you have no control over where those images are used if you pass them to 3rd party code – including Apple’s, e.g. Image(nsImage:). Possibly that only uses them on the main thread, but it might pre-render the image on a separate thread for better performance (to avoid blocking the main thread and causing the app to hang). In fact, in principle, it should.

Loading an image – actually reading it from a file or URL and decompressing it into a raw bitmap suitable for drawing to the screen – should never be done on the main thread, because it can take a long time. A 1 GiB TIFF takes nearly 30 seconds on my iMac Pro, for example (and TIFF uses very lightweight compression, so it’s a relatively fast-to-read format). Anything involving the network could take an unbounded amount of time. Even small files – like a 20 MiB NEF – can take seconds to render because they are non-trivial to decode and/or decompress.
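One way to keep that work off the main thread is to decode with ImageIO on a background queue, asking it to decode eagerly via kCGImageSourceShouldCacheImmediately (documented as making decoding and caching happen at image creation time), and only then wrap the result in an NSImage. A sketch; decodeImmediately and loadImage are hypothetical helper names, and whether AppKit later copies the data again (e.g. for bitmapData) is outside its control:

```swift
import AppKit
import ImageIO

/// Fully decodes the first image in `data` on the calling thread.
/// Intended to be called off the main thread.
func decodeImmediately(_ data: Data) -> CGImage? {
    guard let source = CGImageSourceCreateWithData(data as CFData, nil) else { return nil }
    let options = [kCGImageSourceShouldCacheImmediately: true] as CFDictionary
    return CGImageSourceCreateImageAtIndex(source, 0, options)
}

/// Reads and decodes on a background queue, then delivers on the main queue.
func loadImage(at url: URL, completion: @escaping (NSImage?) -> Void) {
    DispatchQueue.global(qos: .userInitiated).async {
        let image = (try? Data(contentsOf: url))
            .flatMap(decodeImmediately)
            .map { NSImage(cgImage: $0, size: .zero) }  // .zero = use pixel size
        DispatchQueue.main.async { completion(image) }
    }
}
```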

So you’re screwed on multiple levels: not only can you generally not guarantee what thread NSImage is born on nor used on, you can’t use it exclusively on the main thread because that will cause a terrible user experience.

What can you do?

In simple terms, the best you can realistically do is try to ensure that all modifications to the NSImage (including implicit ones, such as loading a bitmap representation upon first use) happen exclusively in one thread [at a time]. For example, in my experiments, putting a lock around otherwise concurrent calls to bitmapData is enough to prevent any data races. Although I am mystified as to how I can still let the main thread draw the image concurrently without any apparent problems. 🤔
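A sketch of that lock, wrapping a rep so that every access to its bytes is serialised and the pointer is scoped to a closure rather than escaping. LockedBitmap is a hypothetical type; the lock only protects accesses that go through it, so AppKit's own internal uses of the rep (e.g. drawing) are not covered:

```swift
import AppKit

/// Serialises access to one NSBitmapImageRep's raw bytes behind a lock.
final class LockedBitmap: @unchecked Sendable {
    private let rep: NSBitmapImageRep
    private let lock = NSLock()

    init(_ rep: NSBitmapImageRep) { self.rep = rep }

    /// Runs `body` with the bytes while holding the lock.
    /// The pointer must not be used after `body` returns.
    func withBytes<R>(_ body: (UnsafePointer<UInt8>?, _ bytesPerRow: Int) throws -> R) rethrows -> R {
        lock.lock()
        defer { lock.unlock() }
        // The first call may trigger the lazy load; later calls see the cache.
        return try body(UnsafePointer(rep.bitmapData), rep.bytesPerRow)
    }
}
```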

If you want to play it really safe, you have to create & pre-load each NSImage on a single thread (not the main thread), before ever sending it to another isolation domain. That means manually reimplementing things like drag and drop of images, because you can no longer use NSImage directly with any drag & drop API; instead you have to accept only – and all – the types that could potentially be images, like URLs or data blobs, and then translate those to & from NSImages manually.
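A sketch of the create-and-pre-load step for data you've received (e.g. from a drop). makePreloadedImage is a hypothetical name, and that touching bitmapData once fully loads each bitmap rep is an assumption based on the behaviour described in this post:

```swift
import AppKit

/// Creates an NSImage from encoded data and forces each bitmap rep to load,
/// all on the calling thread. Call it off the main thread, and only share the
/// result with other threads after it returns.
func makePreloadedImage(from data: Data) -> NSImage? {
    guard let image = NSImage(data: data) else { return nil }
    for case let bitmap as NSBitmapImageRep in image.representations {
        _ = bitmap.bitmapData  // triggers the lazy load
    }
    return image
}
```

Typical use is to call it inside `DispatchQueue.global().async { … }` and hand the returned image to the main queue only once it is fully loaded.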

Complicating all this is that NSImage is unclear about how its caching works. For starters, does this caching apply to the representations or is it a hidden, orthogonal system? Is it thread-safe? Etc.

You can supposedly modify the caching behaviour via the cacheMode property, but in my experience there is no apparent effect no matter what it is set to (neither on the image’s representations nor on render performance in the GUI).

It’s a shame that NSImage has been so neglected, and has so many glaring problems. Over the years Apple have seemingly tried to replace it, introducing new image types over and over again, but all that’s done is made everything more complicated.

You might find this interesting: look for “NSImage: Clarifying the contract for threading” in https://developer.apple.com/library/archive/releasenotes/AppKit/RN-AppKitOlderNotes/index.html

Ah, thanks for that. That’s interesting, as it’s not correct (or at least no longer), as my post highlighted – bitmapData is not an “explicitly mutating method” in name, intuition, or documentation, yet it is not thread-safe, contrary to what those release notes say.

The section below that, though, is helpful (“NSBitmapImageRep: CoreGraphics impedance matching and performance notes”). It confirms what I’d suspected based on disassembly and backtraces: that NSBitmapImageRep is usually a thin wrapper over a CGImage. And I suppose you could try to read between the lines – taking both those sections in mind – to deduce that maybe bitmapData et al. are in fact mutating as an implementation detail, because apparently they require copying the data out of the CGImage (but apparently in a way not privy to the thread-safety that other mutations are, like caching scaled & format-converted versions for screen rendering).

I know that Apple recommend just drawing the bitmap image to a bitmap context with a known pixel format, rather than using bitmapData, although I’m not convinced that’s the most efficient way in some cases – I haven’t reverse-engineered or benchmarked it exhaustively, but I do that sometimes in one of my current projects, and it’s slower. 🤔
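A sketch of that recommended approach: render into a buffer you allocate, with a layout you choose, instead of reading whatever layout bitmapData happens to have. rgbaPixels is a hypothetical helper name, and the format (8-bit RGBA, premultiplied alpha last, sRGB) is an arbitrary assumption. cgImage(forProposedRect:context:hints:) still goes through NSImage, so the same one-thread-at-a-time caution applies:

```swift
import AppKit

/// Renders an NSImage into a caller-owned RGBA8 (premultiplied, sRGB) buffer.
func rgbaPixels(of image: NSImage) -> (width: Int, height: Int, bytes: [UInt8])? {
    guard let cgImage = image.cgImage(forProposedRect: nil, context: nil, hints: nil),
          let sRGB = CGColorSpace(name: CGColorSpace.sRGB) else { return nil }
    let width = cgImage.width, height = cgImage.height
    let bytesPerRow = width * 4
    var bytes = [UInt8](repeating: 0, count: bytesPerRow * height)
    let drewOK: Bool = bytes.withUnsafeMutableBytes { buffer in
        // The context writes straight into `bytes`; it must not outlive this closure.
        guard let context = CGContext(data: buffer.baseAddress, width: width, height: height,
                                      bitsPerComponent: 8, bytesPerRow: bytesPerRow, space: sRGB,
                                      bitmapInfo: CGImageAlphaInfo.premultipliedLast.rawValue) else {
            return false
        }
        context.draw(cgImage, in: CGRect(x: 0, y: 0, width: width, height: height))
        return true
    }
    return drewOK ? (width, height, bytes) : nil
}
```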