Today was one of those days where you plan to really make a dent in your to-do list, and instead end up spending the entire day debugging why the hell some images are suddenly rendering as completely opaque black.

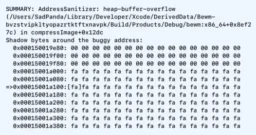

Long story short: for at least some bitmap images, as soon as you call getBitmapDataPlanes it somehow permanently breaks both the NSBitmapImageRep and the NSImage that owns it. The telltale sign of this (aside from the image rendering incorrectly) is this stderr message from AppKit:

Failed to extract pixel data from NSBitmapImageRep. Error: -21778
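For reference, here's roughly what the triggering call looks like from Swift. This is a minimal sketch rather than code from the original investigation: touchBitmapDataPlanes is a hypothetical helper name, and the five-element pointer array follows Apple's documentation for getBitmapDataPlanes (up to five planes, one per sample for planar data). It's wrapped in canImport(AppKit) so the file only does anything on macOS.

```swift
#if canImport(AppKit)
import AppKit

/// Hypothetical helper: calls getBitmapDataPlanes on every NSBitmapImageRep in
/// `image` (the call that appears to trigger the breakage for some formats) and
/// returns the first plane pointer AppKit handed back for each rep.
func touchBitmapDataPlanes(of image: NSImage) -> [UnsafeMutablePointer<UInt8>?] {
    var firstPlanes: [UnsafeMutablePointer<UInt8>?] = []
    for case let rep as NSBitmapImageRep in image.representations {
        // AppKit fills in up to five plane pointers; meshed data uses only the first.
        var planes = [UnsafeMutablePointer<UInt8>?](repeating: nil, count: 5)
        planes.withUnsafeMutableBufferPointer { buffer in
            rep.getBitmapDataPlanes(buffer.baseAddress!)
        }
        firstPlanes.append(planes[0])
    }
    return firstPlanes
}
#endif
```

If the behaviour described above holds, running this against an affected image (e.g. one of the 10-bit AVIFs loaded via NSImage(contentsOf:)) should log the -21778 message and leave the image rendering black.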

This definitely occurs with 10-bit AVIFs, but it's not limited to that bit depth or that file format: the only other mention of this error message I could find online involved a 1-bit source image.

I’ve never seen this happen with 8-bit, 12-bit, or 16-bit images, AVIF or otherwise.

This makes me suspect it's tied to the internal pixel format. 10-bit AVIFs always load as 10 / 40 (bits per sample / bits per pixel), irrespective of whether they have an alpha channel, whereas 8-bit AVIFs always load as 8 / 32 and 12-bit AVIFs as 12 / 48. Maybe NSBitmapImageRep has a bug when the bits per pixel isn't a multiple of 16 (32 and 48 are; 40 isn't)?
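If you want to steer clear of the suspect formats defensively, that hypothesis can be written as a trivial check. To be clear, the multiple-of-16 rule is only a guess that happens to fit the formats mentioned here (40 and 1 bits per pixel broken; 32 and 48 fine), and isSuspectPixelFormat / hasSuspectPixelFormat are hypothetical names, not AppKit API.

```swift
#if canImport(AppKit)
import AppKit
#endif

/// Returns true if `bitsPerPixel` matches the *suspected* (unconfirmed) trigger:
/// a bits-per-pixel value that isn't a multiple of 16.
func isSuspectPixelFormat(bitsPerPixel: Int) -> Bool {
    return bitsPerPixel % 16 != 0
}

#if canImport(AppKit)
extension NSBitmapImageRep {
    /// Hypothetical convenience: applies the heuristic to this rep's own format,
    /// e.g. to decide whether to avoid calling getBitmapDataPlanes on it.
    var hasSuspectPixelFormat: Bool {
        return isSuspectPixelFormat(bitsPerPixel: bitsPerPixel)
    }
}
#endif
```

Because it's only a heuristic, it's best used to decide whether to take a more cautious path, not as proof that a given rep is safe.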

If you call bitmapData instead, you get the same error message, but it doesn't stop the NSImage / NSBitmapImageRep from rendering correctly. However, the pointer returned from bitmapData points to opaque black. It's particularly weird that it returns a pointer to a valid memory allocation, of at least the expected size, yet the contents are nothing but zeroes. It seems to pre-allocate an output buffer as zeroed memory, fail to actually write to that buffer, and then return it anyway instead of returning nil. 😕
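Since bitmapData hands back a zero-filled buffer rather than nil, one way to notice the failure is to scan the bytes. A minimal sketch, assuming a meshed (non-planar) rep where the first plane is bytesPerRow × pixelsHigh bytes; isAllZero and bitmapDataLooksZeroed are hypothetical names. Note that a genuinely black image without alpha, or a fully transparent one, would also be all zeroes, so a true result is a hint, not proof.

```swift
#if canImport(AppKit)
import AppKit
#endif

/// Returns true if every byte in `buffer` is zero.
func isAllZero(_ buffer: UnsafeRawBufferPointer) -> Bool {
    return buffer.allSatisfy { $0 == 0 }
}

#if canImport(AppKit)
extension NSBitmapImageRep {
    /// Hypothetical helper: reads bitmapData (which still logs the -21778 error
    /// on affected images) and reports whether it is entirely zeroes.
    /// Returns nil for planar reps or when no data pointer is available.
    var bitmapDataLooksZeroed: Bool? {
        guard !isPlanar, let data = bitmapData else { return nil }
        let length = bytesPerRow * pixelsHigh
        return isAllZero(UnsafeRawBufferPointer(start: UnsafeRawPointer(data), count: length))
    }
}
#endif
```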

Reported to Apple as FB13693411.

Partial workaround

If you call recache on the NSImage afterwards, the image renders correctly again. That doesn't help if your goal is to access the bitmap bytes, but if you don't need them (e.g. you're only encountering this because some library code is triggering the bug), you might be able to work around it with recache.
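In code, the workaround amounts to calling recache once the offending call has happened. A minimal sketch, assuming the triggering code runs synchronously inside the closure; runRecachingAfterward is a hypothetical name, not an AppKit API.

```swift
#if canImport(AppKit)
import AppKit

/// Hypothetical helper: runs `body` (e.g. library code that may call
/// getBitmapDataPlanes on one of `image`'s reps), then calls recache on the
/// image so it can render correctly again.
func runRecachingAfterward<T>(_ image: NSImage, _ body: () throws -> T) rethrows -> T {
    // defer runs after `body` returns or throws, so recache always happens.
    defer { image.recache() }
    return try body()
}
#endif
```

Using defer means the image still gets recached if the wrapped code throws. For example, a call into a hypothetical makeThumbnail(from:) function could be wrapped as runRecachingAfterward(image) { makeThumbnail(from: image) }.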