This morning I tested out Apple’s Vision Pro in an Apple Store. And I’ve decided to write about it, mostly for my own future nostalgia, but also because my experience was markedly different to what’s been widely reported in tech news.

I had intended to just buy an Apple Vision Pro on release day, but by the time I woke up and went to Apple’s online store – a couple of hours after pre-orders opened – they were showing a nearly two-month shipping delay (and no in-store pick-ups at all). So I figured there was no point ordering then; like it or not, I’d have plenty of time to see what others think first.

Of course, it turns out it was not in fact massively out of stock. I later found out that people had ordered theirs later that day, or even the next day, and were still included in the very first shipment. Nobody had to wait two months for theirs, even if they ordered weeks later. Apple’s online store was full of shit.

But I’m glad for it, because as soon as I was resigned to not ordering one, I was at ease with that decision. I felt oddly relieved. Having since read & listened to many people’s impressions & commentary over the last month, I’ve only grown more confident in that decision. And now that I’ve tried one for real, I am certain of it.

The Good

Ease of use

I found the interface to be pretty intuitive. Eye tracking worked very well, and gesture recognition was fairly reliable – neither was perfect, but then neither is mouse tracking, nor “the key I meant to press” tracking. 😉

It’s hard to say with any confidence from such a short use of the Vision Pro, but my impression is that its eye & gesture tracking is at least as accurate as touch on iPhones, iPads, and Apple Watches. My Apple Watch & iPhone routinely reject my taps just to spite me (they animate the GUI to show that they know that I did tap, yet they refuse to accept it).

Which is to say, I suspect it would annoy me at times with its errors but I don’t think it’d be a barrier to long-term use.

Sound

One thing which I did not remotely anticipate is how good the sound isolation is – even more astounding given there’s nothing in or on your ear to provide a physical barrier. There were dozens of people in the Apple Store, including several pairs right around me going through their own Vision Pro demos or iPhone upgrades, and with the headset on I barely registered any of their conversations.

I find it hard to believe it’s due to traditional noise cancellation methods, just given the physical position and arrangement of the speakers, so this might be as much a psychological ‘trick’ as anything. In any case, it is effective.

I was also impressed by how well 3D audio worked (and how good the sound quality was in general). Better than AirPods (and Beats Pros) in my experience, although that’s a low bar.

I’m less sure how the Vision Pro’s speakers stack up against real headphones – even my aging Sony MDR-ZX780DCs – but it’s at least a reasonable comparison, which is impressive given the form factor of the Vision Pro’s speakers.

Real world view

When the Vision Pro was first announced I was a little disappointed that it uses cameras to ‘fake’ transparency, rather than using genuine optical transparency. Nonetheless, I was happy to see that the effect is mostly sufficient.

It wasn’t hard to find flaws if you looked – the cameras are not correctly placed to actually see what you see, for example, so any objects closer than about a metre have noticeable parallax errors. That’s very apparent if you do something as simple as move your hands in front of you, even at arm’s length.

Yet, looking around the real world worked fine in practice. There is perceptible lag, but only barely – not enough to really cause any issues; you’re not going to accidentally walk into moving objects, for example, and you could probably even play [real] sports with the Vision Pro on (although you wouldn’t be doing yourself any favours).

I should note that it was in no way realistic because you’re clearly looking at a resolution-limited computer screen (more on that later). And I didn’t even test things like dynamic range or optical aberrations of the lenses, as I was in a very evenly lit and low-contrast Apple Store.

Immersive experiences

I didn’t get to do the butterfly & dinosaur one, which is a shame because by all accounts it’s particularly good, but I got the quick demo reel of spherical videos (along with a couple of “3D” photos & videos). It was a bit hit & miss (more on that in later sections), but there were a few moments where I was actually pretty pleased with the experience. I’ve visited Haleakalā a few times but never seen the crater – the weather has always conspired against me – so I actually got lost for a minute or so just enjoying that view. The subtle animation of the mist drifting up & down the crater walls was a sublime touch. Likewise the gentle rain on the lake near Mount Hood.

The 3D video of a kid blowing out the candles on their birthday cake worked relatively well. The feeling of depth was nice – aided by the cake being very close to the camera and the use of a wide angle lens. I suspect wide-angle photos are much more amenable to the “3D photo” effect (they’re often described as more immersive even in plain 2D – although I think that effect is exaggerated by many people).

Human intrusions

The way people very subtly appear when you look in their direction while in full VR mode was quite nice. I like that they are visible but remain faint apparitions. I had received the mistaken impression from second-hand accounts that intruders appeared largely opaque, and thus much more obtrusive. The implementation strikes a good balance in providing awareness – and facilitating communication – without interrupting more than necessary.

Physical comfort

It’s of course very hard to deduce the real-world comfort of the Vision Pro from a mere fifteen-minute session, but for whatever it’s worth I didn’t have any issues regarding weight, size, or contact. The headset certainly wasn’t unnoticeable, but I had no trouble forgetting about those aspects while using it.

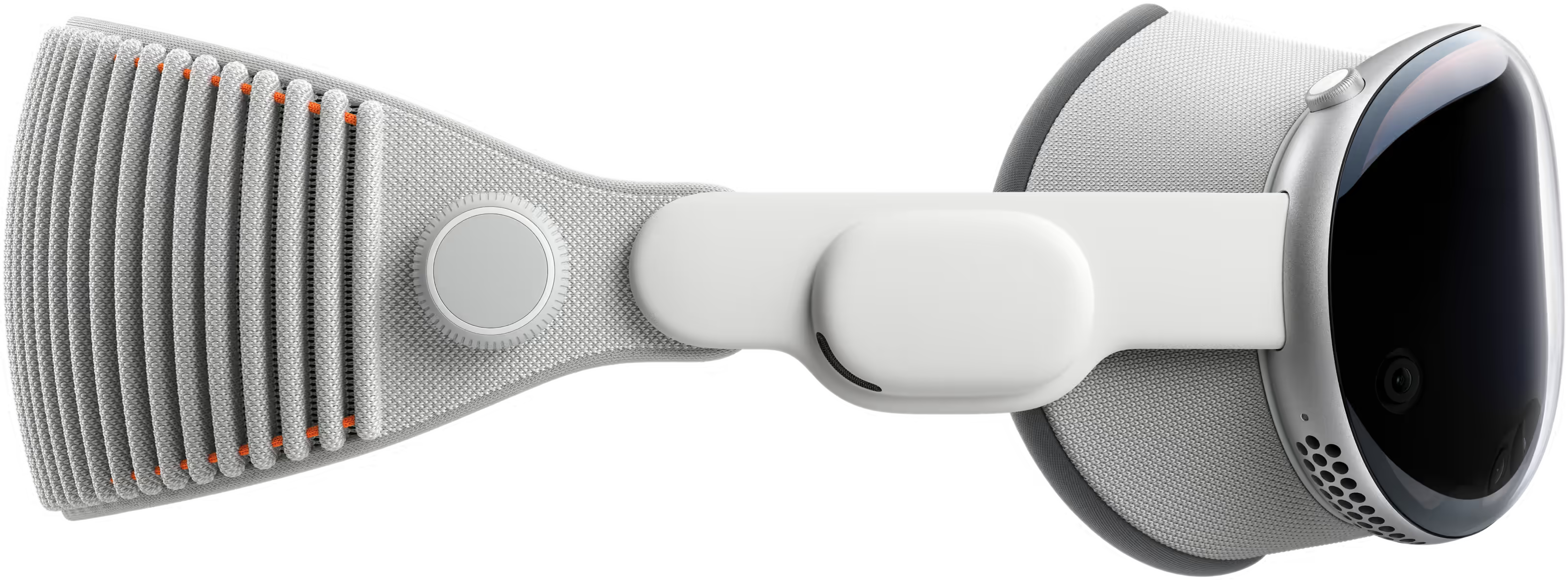

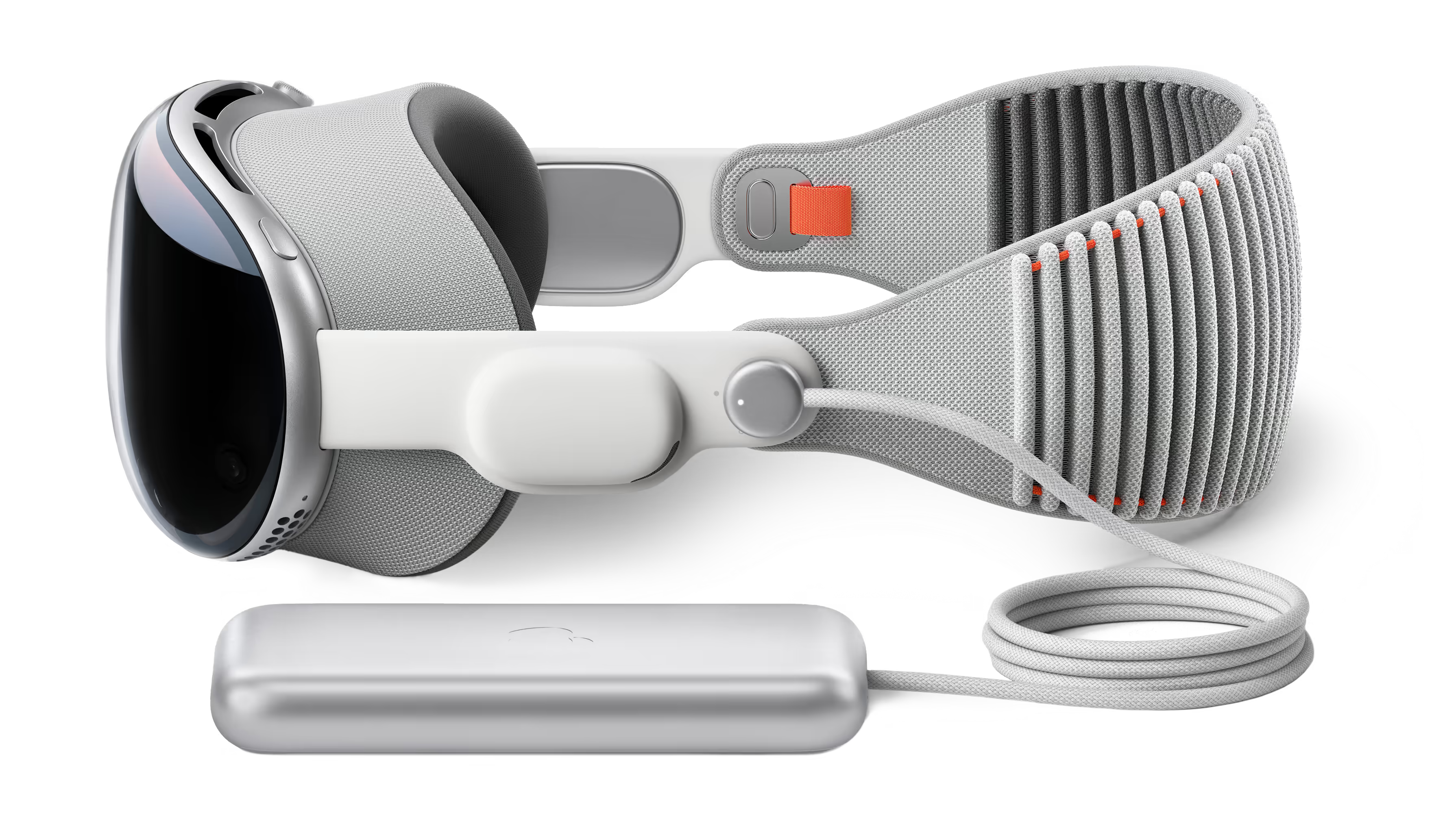

Also, it wasn’t until I was mostly done writing this article that I even remembered that the Vision Pro has a cable sticking out of it. The battery pack stayed on the table in front of me, and the only time I noticed the cable – even in the slightest – was when putting the unit on (merely because I had to make sure the cable wasn’t tangled around my head). I was expecting to feel the cable tugging and pushing on the headset during use, but did not.

This is in stark contrast to the power cable on my MacBook Air which is constantly getting snagged and yanked by my apparently villainous couch. So I’m very curious how the Vision Pro would work in a more typical environment, rather than at the Apple Store with basically nothing but empty space around me as I sat on a stool.

Minimal internal lens flare & reflection

At least in the bright Apple Store, I didn’t find the lens flare & reflections to be distracting. They are present, but I really only noticed them on the very first screen, where you’re in a black void and thus of course any such optical imperfections are most visible.

This is pretty good, by my estimation, since I find sunglasses to be irritating due to seeing the reflection of my own eyeballs in them. The Vision Pro has the big benefit of the [mostly] enclosed mask, to largely eliminate external sources of light. So it should have far fewer issues with flare & reflections, as a matter of principle.

The main reflection I did notice – and had a slightly harder time ignoring – was the glow of the screen on the inside of the light shield. It’s a pity Apple used a grey, textured material for the interior, rather than something dark like Musou black fabric.

Still, unless you plan to work in a very dark or high-contrast VR environment – perhaps the moonscape that I tried briefly during the demo – you should be fine in this respect.

Real world geometry is no limit

Folks have unanimously stated that – surprising or not – there’s no visual incongruence with pushing AR elements “through” real-world ones. One of the first tests I did was to grab a window and shove it through the table in front of me. Despite clearly violating all logic, my eyes & brain apparently had no issue with it – it didn’t feel wrong, or weird, or uncomfortable.

So – while I didn’t test it – I can easily believe that you can indeed watch a movie on a [virtually] cinema-sized screen despite being stuck in cattle class on a plane. And I can imagine it would be a genuine emotional benefit to have that feeling of so much more personal space, even though it’s “fake”.

Honestly, if I were to get a Vision Pro its use as an aid to commercial air travel might actually be one of the most justifiable reasons.

The Bad

Buggy demo units

My time actually using the Vision Pro was significantly shortened by the demo unit refusing to reset properly. It took three attempts – and a consultation between Apple Store staff – before they finally got it working.

On the first two usage attempts it went straight from the “hold down the crown button to shift the lenses” step to the home screen, bypassing eye tracking calibration. It didn’t seem particularly unusable without proper calibration, but my Apple handler refused to start the demo without it.

Bad light seal

Perhaps it’s just me and my apparently tiny nose, but there was a big gap between the unit and my nose. I could easily look through and see the table in front of me, my own lap, etc. This surprised me given Apple themselves have put a lot of emphasis on the importance of a good fit and no light leaks in order to have the proper experience.

However, in use I didn’t find it much of an issue – I wasn’t distracted nor blinded by the light leaking in. I think as long as my focus was actually on the screens of the Vision Pro, and my attention on whatever I was looking at within them, it was okay. Although, just like the glare & reflections within the lenses, it may not be so easy to ignore in a dark VR environment.

Non-immersive experiences

Most of the 3D photos & videos I saw didn’t do much for me. I can clearly see the appeal in theory, but the implementation on the Vision Pro is frustrated by several factors.

For a start, a lot of the 3D photos – and especially videos – were blurry outside of the centre[1]. Really blurry, in the case of the Alicia Keys clip – she was reasonably in focus but almost everything else was way out of focus. I think that was in the original recording, although I can’t be sure it wasn’t buggy foveated rendering or somesuch.

Second, even when they weren’t blurry, they were often low resolution. It’s hard to say whether this is due to the Vision Pro itself (more on this later) or to the source material.

I was hoping that the panoramas would be a big (pleasant) experience, as I’m quite a fan of taking panoramic photos (and photo spheres) even though you can’t really view them well on fixed displays. In a way I’ve been waiting and preparing for VR goggles for decades. Heck, I was super excited when QuickTime VR was released, even though it unfortunately turned out to be decades ahead of its time.

Yet, I was a bit disappointed with the panorama experience on the Vision Pro. I’m not entirely sure why, although I know one obvious reason was that you can see the edges. Any illusion of being there is rattled when the edge of the photo appears in view. Photo spheres are the better way to go.

Seeing the edges might sound trite – after all, we see the edges of photos and videos all the time on our existing displays; what’s the problem? It’s a good question – I don’t know if I can explain it, I just know what I felt. Perhaps it’s an uncanny valley sort of problem – because you are in a nominally immersive, VR environment, it matters so much more when the illusion is shattered. Perhaps it’s something you get used to?

In any case, even for photo spheres the feeling of immersion is compromised by the field of view being way too small…

Limited field of view

I had heard that the Vision Pro has a limited field of view – even compared to its contemporaries – but I was still surprised to see what that’s actually like. It’s even more limited than I expected. It’s like wearing bad goggles – blinkers, perhaps. A kind of tunnel vision. I’ve heard it said that you “just” need to keep your eyes centred and move only your head instead, but even looking dead ahead I clearly perceived the outline of the screens, and it took some effort to ignore that (mostly successfully).

As noted earlier, this kinda ruined any would-be feeling of immersion for me, in most cases. You’re not much more “there” than you are viewing a photo in a picture frame (or on a traditional screen), or watching a video on a TV placed too far away.

Everything’s too close & big by default

I was surprised that windows open way too close (in perceived depth) and too big (in field of view) by default. I was constantly manually resizing things and pushing them away from me, in order to actually be able to see them [fully] and comfortably.

I do not like having to move my head just to look around a single window, it turns out.

I also found it quite unintuitive that windows enlarge as you push them away, maintaining the same angle of view. It was not only annoying – since the whole point of pushing them away was to make them smaller and feel less claustrophobic – but it made it hard to actually judge if & to what degree they were moving. More than once I repeated a “get back” window movement because it seemed like the first try was silently ignored (and honestly, I can’t be sure it wasn’t – that’s the point).
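The “grows as it moves away” behaviour is just the geometry of holding the angle of view constant: a flat window’s apparent (angular) width depends only on the ratio of its physical width to its distance. A minimal sketch, assuming a flat window viewed head-on; the function names are hypothetical and are not visionOS APIs:

```python
import math

def angular_width_deg(width_m: float, distance_m: float) -> float:
    """Angle (in degrees) subtended by a flat window of width_m seen head-on from distance_m."""
    return math.degrees(2 * math.atan(width_m / (2 * distance_m)))

def width_for_angle(angle_deg: float, distance_m: float) -> float:
    """Physical width a window needs at distance_m to subtend angle_deg."""
    return 2 * distance_m * math.tan(math.radians(angle_deg) / 2)

# A 1 m wide window at 1 m fills roughly 53 degrees of view.
angle = angular_width_deg(1.0, 1.0)

# Keeping that angle while pushing the window to 2 m means it must grow to 2 m wide...
print(width_for_angle(angle, 2.0))

# ...whereas leaving it 1 m wide at 2 m would shrink it to roughly 28 degrees.
print(angular_width_deg(1.0, 2.0))
```

Because the angle stays fixed, the window looks the same size after the move, which is why it is so hard to tell whether a push actually did anything.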

3D movies

I watched the brief trailer for Super Mario Bros. I didn’t really get much of a 3D effect, but I did get the very limited field of view (see prior point) and poor visual quality (see below). I’m actually a bit bullish on 3D movies, but I think we’re still just not there technologically[2].

I do like to have a big screen – such that my vision is pretty filled by a movie – but apparently I really don’t like it when there’s a black mask over the outskirts of the movie. Even if it’s only visible in my peripheral vision.

Possibly I’d get used to this in time, but of course I don’t really want to – I should be able to see the whole movie. Otherwise, what’s the point? If the director wanted me to see only a subset of the view, they’d have filmed it that way.

I presumably could also have manually moved the window back – and I did for some of the other videos I watched – but it’s just not practical to have to do that for every video I ever watch.

Perhaps the problem is in taking existing movies – designed for a relatively tiny field of view (≤40° typically) – and naively shoving them in your face in the name of immersion. Maybe what we need is to add new content around the existing frame. Despite our huge angle of vision, our focus area is actually quite small. It’s uncomfortable and confusing to have to look around frequently and rapidly just to make sense of a movie.
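To put the ≤40° figure in living-room terms, here is a small sketch of the distance at which a flat 16:9 screen fills a given horizontal field of view. The 65-inch TV is purely an illustrative assumption, not something from the demo:

```python
import math

def screen_width_from_diagonal(diagonal: float, aspect_w: float = 16, aspect_h: float = 9) -> float:
    """Physical width of a flat screen, in the same units as its diagonal."""
    return diagonal * aspect_w / math.hypot(aspect_w, aspect_h)

def viewing_distance_for_fov(screen_width: float, fov_deg: float) -> float:
    """Distance (same units as screen_width) at which the screen subtends fov_deg horizontally."""
    return screen_width / (2 * math.tan(math.radians(fov_deg) / 2))

# Hypothetical 65" 16:9 TV, converted to centimetres.
width_cm = screen_width_from_diagonal(65 * 2.54)
print(f"{viewing_distance_for_fov(width_cm, 40):.0f} cm")
```

That lands at roughly two metres, an ordinary couch-to-TV distance, which suggests how conventionally framed movies are meant to sit in your vision.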

The Ugly

Very blurry & low resolution

I was really surprised to see pixels. Immediately. Even though I was already aware – mainly from John’s stumbling over the topic on ATP – that the PPD (pixels per degree) is actually quite poor on the Vision Pro, at just 34[3]. A “Retina display” at typical viewing distances is around 100. Even an ancient non-Retina Apple display is about 50.

In fact, 34 is about the same as an original Macintosh from 1984. 😳

I found this handy calculator for determining PPD, in case you want to estimate for your own devices. If you have a 5K 27″ monitor, for example, but typically sit with your nose about 4 cm from it, then you’re already used to the Vision Pro’s display resolution[4].
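For a rough check without the calculator, here is a minimal sketch of one common way to estimate PPD: divide the horizontal pixel count by the horizontal angle the whole screen subtends. It assumes a flat 16:9 panel viewed head-on from its centre, and it is not necessarily the method the linked calculator uses:

```python
import math

def average_ppd(h_pixels, diagonal_in, distance_cm, aspect=(16, 9)):
    """Estimate pixels per degree, averaged across the screen's full width.

    Assumes a flat screen viewed head-on from its horizontal centre.
    """
    aw, ah = aspect
    # Physical width of the panel, derived from its diagonal and aspect ratio.
    width_cm = diagonal_in * 2.54 * aw / math.hypot(aw, ah)
    # Horizontal angle the whole panel subtends at the given distance.
    fov_deg = math.degrees(2 * math.atan(width_cm / 2 / distance_cm))
    # Spread the horizontal pixel count evenly over that angle.
    return h_pixels / fov_deg

# A 27" 5K (5120 x 2880) panel, nose-to-screen vs a more usual desk distance.
print(f"{average_ppd(5120, 27, 4):.0f} PPD at 4 cm")
print(f"{average_ppd(5120, 27, 60):.0f} PPD at 60 cm")
```

Under these assumptions the panel works out to roughly 31 PPD at 4 cm (near the Vision Pro’s 34) and roughly 97 PPD at 60 cm (the “Retina” ballpark). Averaging over the width is only one convention; measuring the angle of a single pixel at the centre of the screen gives a much lower figure at very short distances, so different calculators can disagree.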

For reference, the human eye is apparently limited to about 128 PPD at best. That seems plausible just based on my own experience – I can’t really see individual pixels at ~100 PPD with my iMac Pro, for example, but my eyesight’s not perfect and that 128 number assumes absolute best-case conditions for distinguishing detail, which perhaps isn’t the typical reality.

Furthermore, unlike the original Macintosh’s screen – which at least had quite crisp pixels – the Vision Pro is blurry as well. That surprised me less – I figured there might be some calibration required, which was perhaps unintentionally skipped by the buggy demo unit. But my Apple Store handler didn’t seem to think so, yet seemed surprised by my comments (that the view was pixelated and blurry). He had no real answer to that. He implied (by omission) that my experience was normal. 😕

Jittery

The other thing I noticed within a literal second of using the unit is that the artificial visuals jitter – jump randomly about by a pixel or two. All the time. It’s less noticeable in full VR mode where you have no objective reference in the form of real world objects, but in AR mode everything displayed by the Vision Pro is shaking. I was able to mostly ignore it throughout the demo – but only with conscious effort. Any time I let my mind or vision wander in the slightest, the shaking immediately bothered me once more.

To be clear, this was while sitting perfectly still. I didn’t really test actual lateral movement of the headset, as I was asked to stay seated for the entire demo.

Since everything you see in the Vision Pro is technically artificial – it’s all from opaque LED screens, even the view of the real world that’s piped in via cameras – this jitteriness is baffling. I’m pretty sure it was the virtual objects that were moving, not the feed from the real world – just based on my own perception of what was and wasn’t moving – but I have no explanation for why that would be the case. While I could sense a tiny bit of lag in the real world view, it seemed to remain correctly positioned (and stably positioned, more to the point) relative to objective reality.

It’s hard to say from just fifteen minutes of use, but I suspect this jittering would contribute significantly to eye strain.

Eye strain

When I took the Vision Pro off, my eyes were immediately assaulted by [comparatively] bright light and much sharper everything. It was both uncomfortable and a relief.

It took several minutes for my vision to de-blur. It’s a very similar experience to looking through the viewfinder on my Z9 for an extended period. And similarly it took a good couple of hours for my eyes to get fully back to normal, and to stop feeling strained.

Based on that parallel experience, it seems very clear that I cannot use a Vision Pro for any significant amount of time, without serious eye strain that causes lasting blurry vision and headaches. 😣

I’m assured by my optometrist that my vision is actually excellent – much better than average for my age, and I’m not that old yet anyway – and I’ve never needed glasses for anything[5]. So I don’t think the problem is me. I think the problem is quite apparent from the facts: the Vision Pro simply has a very low-resolution, unstable display that is uncomfortable to look at.

So now what?

Now I wait.

If I’d decided to develop apps for the Vision Pro, as a business choice, then it’d make sense to own one – it could be considered a dev kit; an early prototype. But for now I’m content not to. There doesn’t seem to be a rush – the app market is comparatively tiny.

For personal use it makes no sense to get a Vision Pro. Aside from its novelty factor and some very limited uses, it’s inferior to a Mac for almost all purposes (productivity tasks, watching movies, reading books, video calls, etc.).

Yet I’m hopeful that the time for AR and VR headsets will come, eventually. Still at least several years from now, based on what I’m seeing with the Vision Pro. But one day.

I’ve been trying to discern if the Vision Pro is an iPhone moment or an iPad moment, since it was announced. I was bearish on the iPhone and bullish on the iPad, apparently at odds with the entire rest of the planet. And I’ve since conceded defeat entirely on the iPad – when I replaced my iPad Pro 13″ with a MacBook Air, it was such a relief – I finally had a device which I could use freely and broadly, rather than a glorified iPhone for web browsing and watching video. So I’m leery about getting too enthusiastic about a new class of device which clearly has some of the same existential challenges as the iPad.

One thing seems clear – it’s not a Mac moment. I don’t see anything with the Vision Pro that fundamentally changes the nature of computing, let alone day-to-day life. But then, I don’t think it’s fair to expect it to – there’s only been one Mac moment so far.

[1] This might be just flaws in the demo material, although it’s hard to imagine Apple letting that fly. I have to assume the Vision Pro itself is the limitation here.

That said, in the “3D” video of the girl popping bubbles I saw what looked exactly like JPEG or MPEG compression artefacts (mainly blocking and over-smoothing). I can’t be certain whether that’s an issue in the video file or in its playback by the Vision Pro, but I would assume that graphical rendering glitches would appear somewhat differently…?

[2] Most immediately, we lack the display technology to show a 4K (or better) video in 3D, whether via a headset like the Vision Pro or traditional means like coloured or polarised glasses. But that’s merely the easy part – we also need real-time rendering of the movie itself, in order to do proper foveated depth rendering – i.e. so that if you look at something in the foreground or background you can actually bring it into focus, even if the director hadn’t planned on that. As best I can tell, practically nobody is even working on that yet. 😕

[3] Tangentially, it’s baffling how news media outlets like The Verge can shamelessly try to spin that as in any way good. Talk about drinking the Apple Kool-Aid. 😠

[4] And if you can actually focus at that distance, then I applaud & hate you, because I can only reminisce fondly about those days. Stupid aging. 😔

[5] Although admittedly I can no longer focus on the end of my nose like I used to when I was a few years younger, so I know my descent into old-age far-sightedness has begun; I’m told I will need reading glasses at some point in my life (barring an unfortunate early exit).