Rob Napier’s short anecdote intrigued me, and I was curious whether anything had changed in the fourteen months since. After all, that’s supposed to be eons in “AI” terms, right?

Spoiler: not much has changed. Kagi does a bit better, but Bing is still the best, Google still sucks, and ChatGPT (counting Bing Copilot too, since it’s based on ChatGPT¹) is sometimes good, when it’s in the right mood.

Broadly, these results are pretty close to my general experience and impression. LLMs continue to be mediocre at best at factual work (but can be very interesting for creative work). I find Kagi usually does at least as good a job as Bing in terms of content relevance and correctness (and it wins handily on the tie-breakers of not relying on a dodgy business model and not being burdened by an ugly GUI).

As an aside, but an important one: it’s incredibly brazen that LLMs like Bing Copilot claim they’re not just monumental copyright-infringement machines when they produce results that accidentally include the raw markup from whatever they’re plagiarising, markup they clearly don’t understand and, in any ethical sense, didn’t originate themselves. It’d be funny – for its absurd ineptitude – if it weren’t so despicable.

☝️I use 1Blocker, so the results I see (as shown in the following screenshots) may not match what you see, if you use a different ad blocker (or insanely don’t use an ad blocker at all).

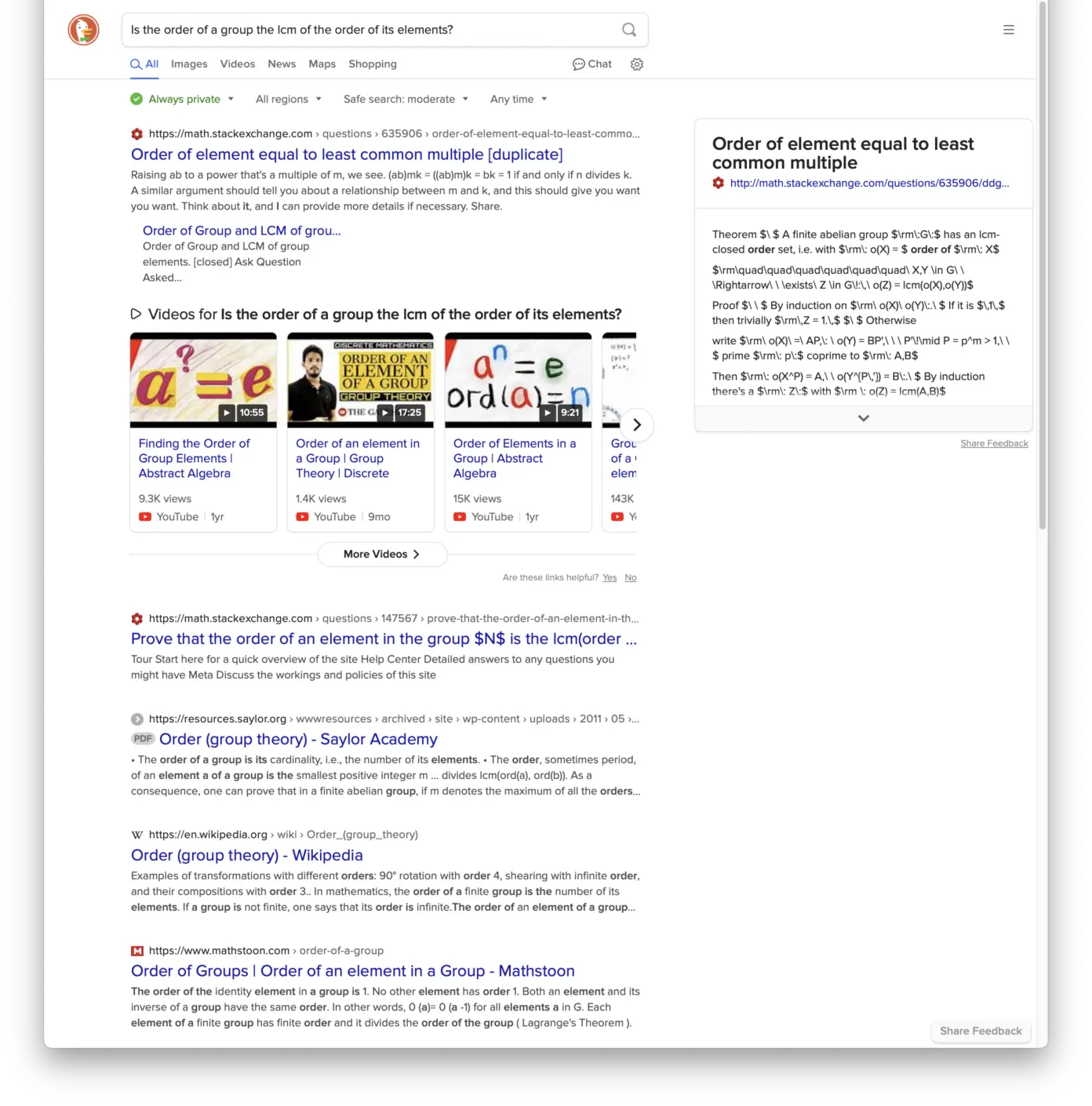

Kagi

I’ve been using Kagi for five months now (2,542 searches so far) and like it (mostly²). Kagi’s results aren’t always stellar, but it’s clean and pretty reliable and – like Rob – I like the simplicity of their revolutionary business model: they provide a service that I pay for.

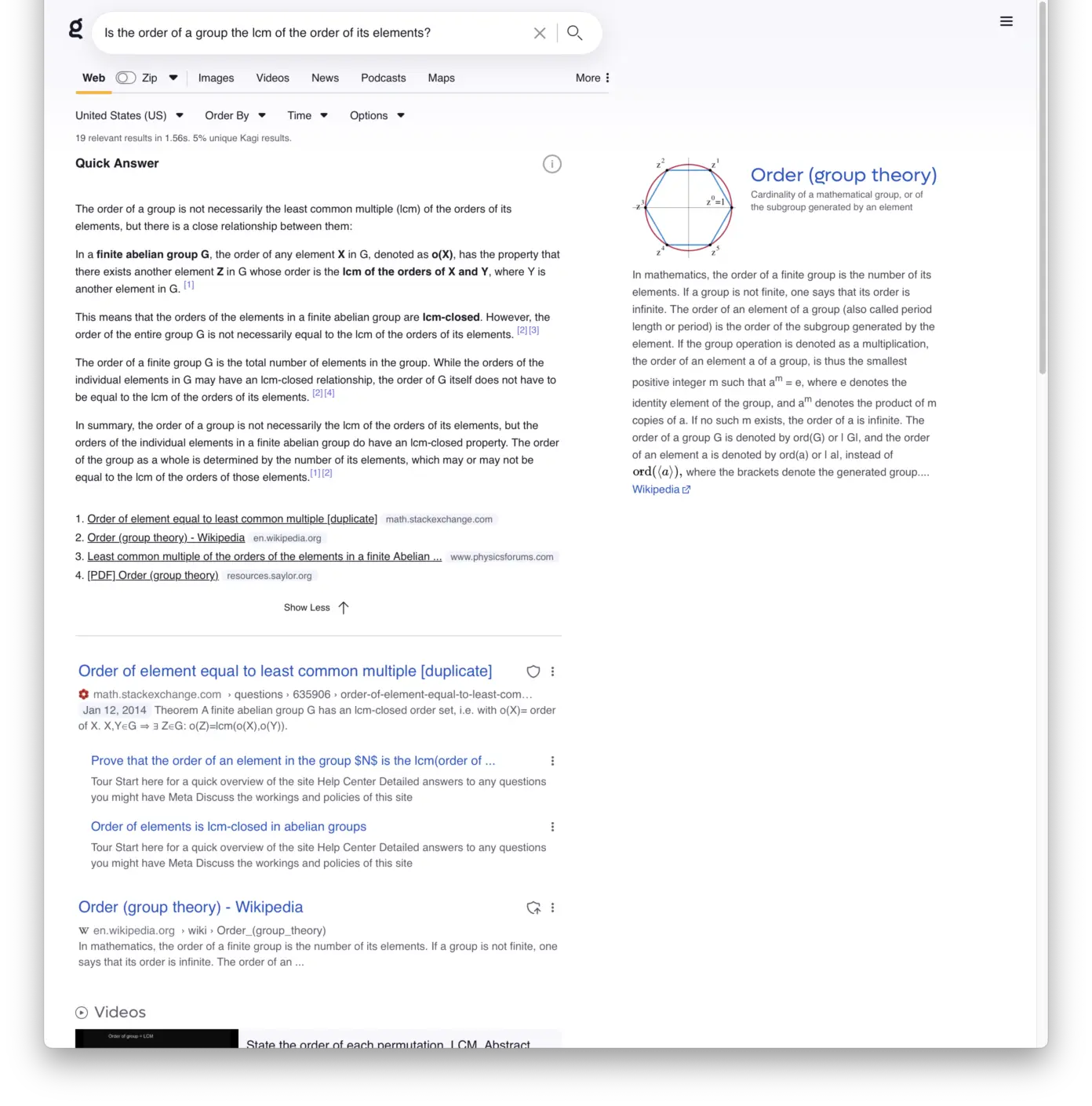

Because this query ends with a question mark, Kagi (as of earlier this year) adds its LLM-based “Quick Answer” section to the top of the results. It isn’t all that helpful, though: it does technically give the answer, but only four paragraphs in. It does at least include citations (although half of them are unhelpful, like most of its LLM-generated text).

☝️You can turn this “Quick Answer” functionality off in Kagi’s settings, if you like, although I find it’s convenient to simply control it case-by-case with the presence (or absence) of the trailing question mark. Because it accurately provides citations, based specifically on the search results you’re viewing, I find it’s often actually a good way to quickly figure out which results are the most relevant or useful.

The Wikipedia excerpt to the right provides the answer succinctly in its first sentence (and that same result appears organically as the third search result, though it only barely makes it above the fold on my 27″ screen). Note that I’ve configured Kagi to prioritise results from Wikipedia (since in my experience those are, where available, far more likely to be useful than everything else – and I’m not the only one who thinks so).

The answer is at least on the first screen of search results, which is much better than what Rob saw a year ago.

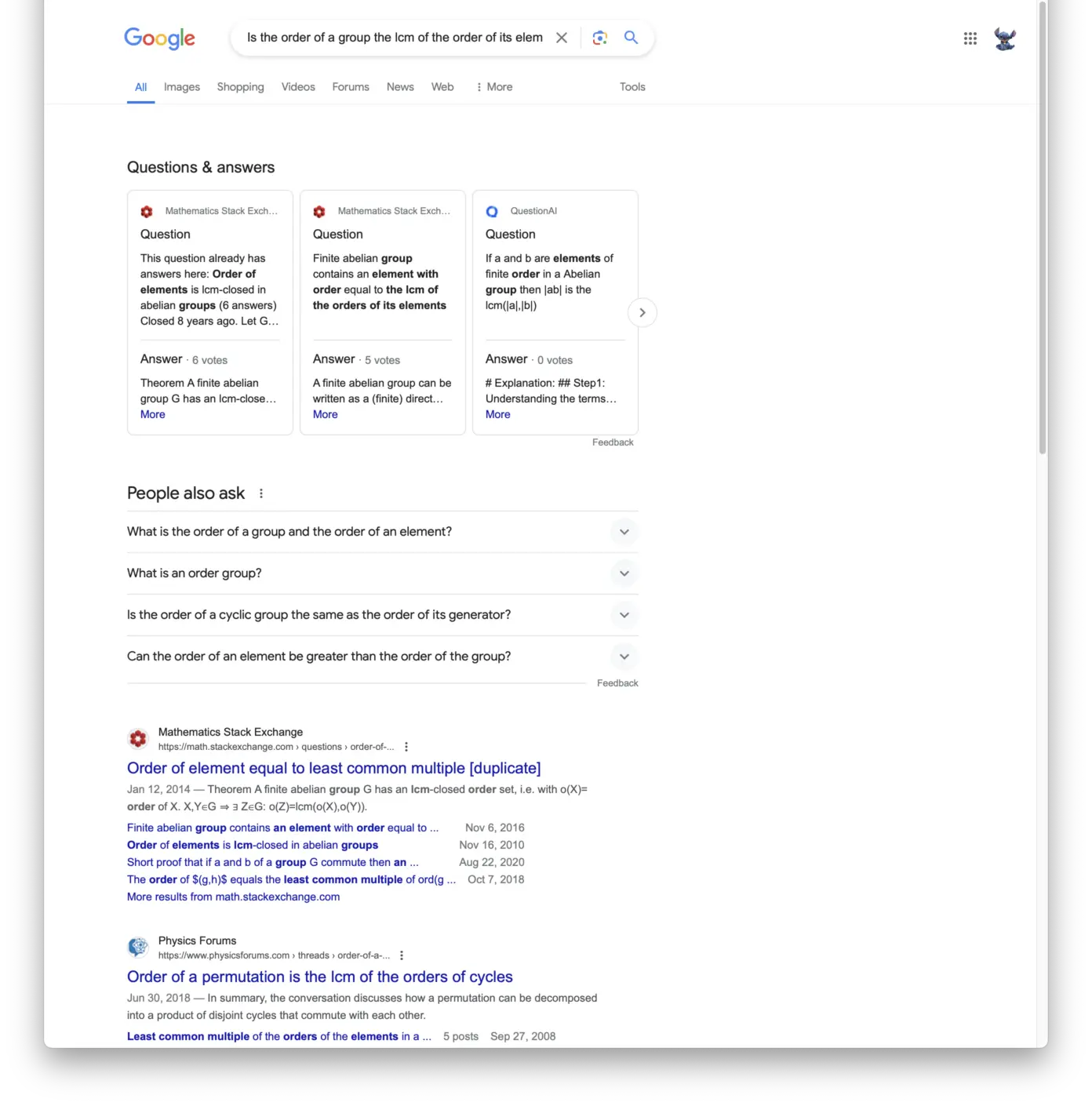

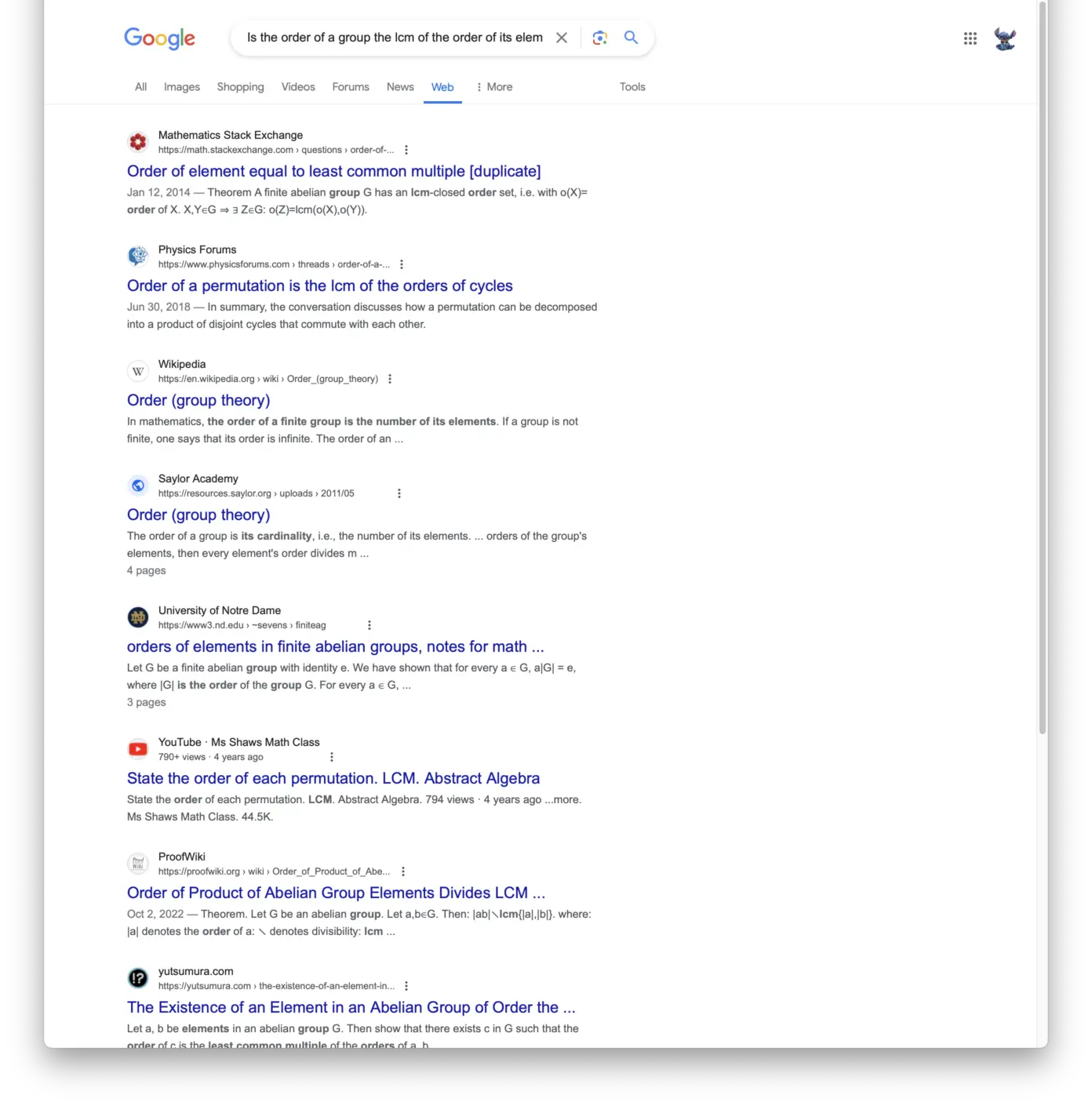

DuckDuckGo

Most of the results are unhelpful (and the StackExchange question highlighted on the right is basically gibberish, because it’s showing the raw markup of a bunch of maths). Two-thirds of the way down the screen, it finally links to the Saylor Academy PDF with an excerpt that answers the question (followed by a link to the relevant Wikipedia article, likewise with the key excerpt inline).

It wastes a whole bunch of space with links to YouTube videos, including thumbnails and GUI chrome.

So, mediocre at best. And, from the sound of it, markedly worse than a year ago, when Rob tested it.

Google

By default, the first screen doesn’t answer the question at all. In fact, most of the screen is wasted with:

- A “Questions & answers” section pulling from StackExchange and QuestionAI, with excerpts that are too short to actually convey their substance.

- A “People also ask” section that does nothing to answer the question.

There are only two actual search results on the screen, and neither of them answers the question.

So, a plain bad result. Same as a year ago.

When I switch to “Web” mode, the results improve to merely mediocre – the third and fourth results are relevant (it’s the Saylor Academy & Wikipedia again), but the rest are not.

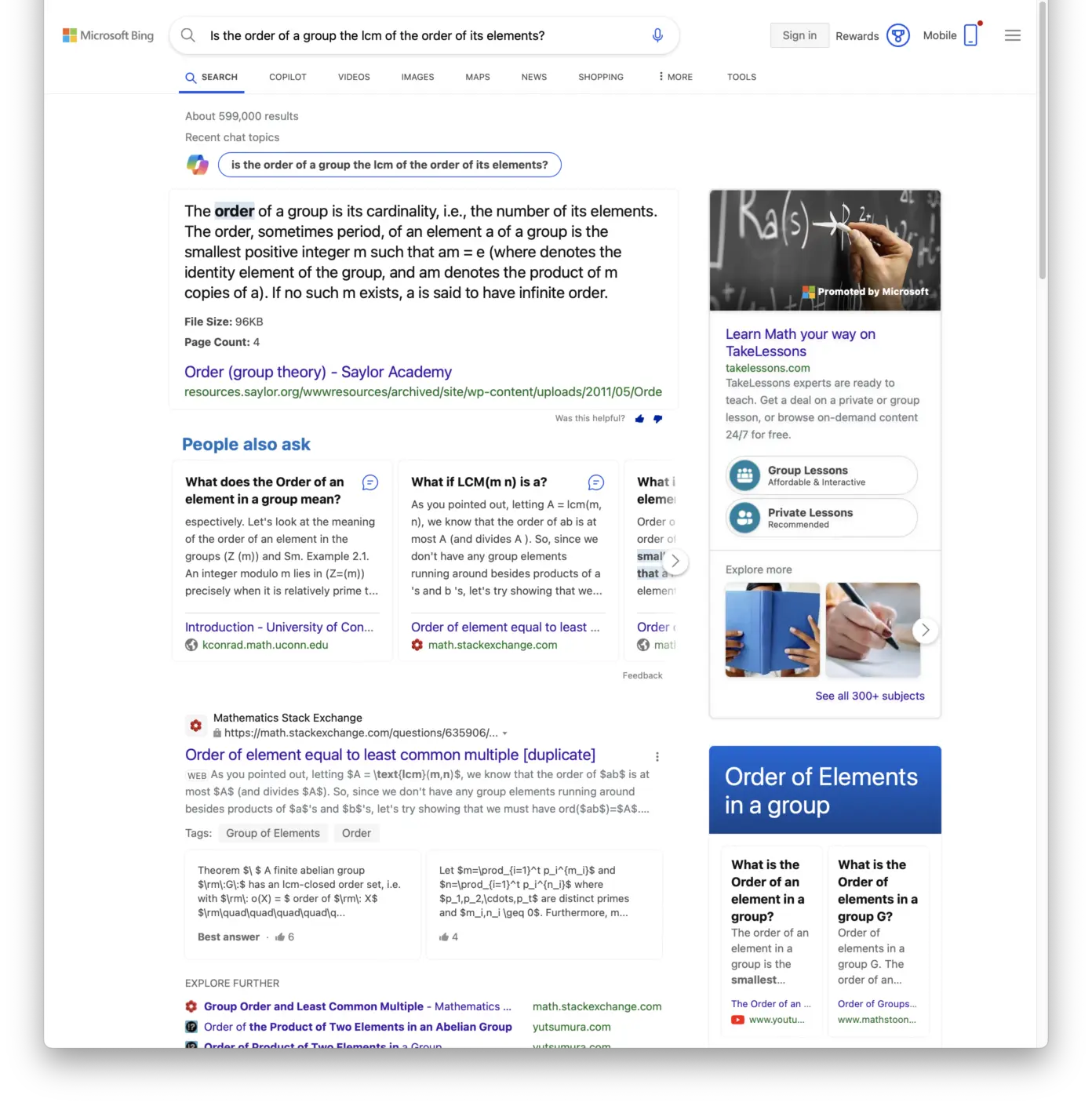

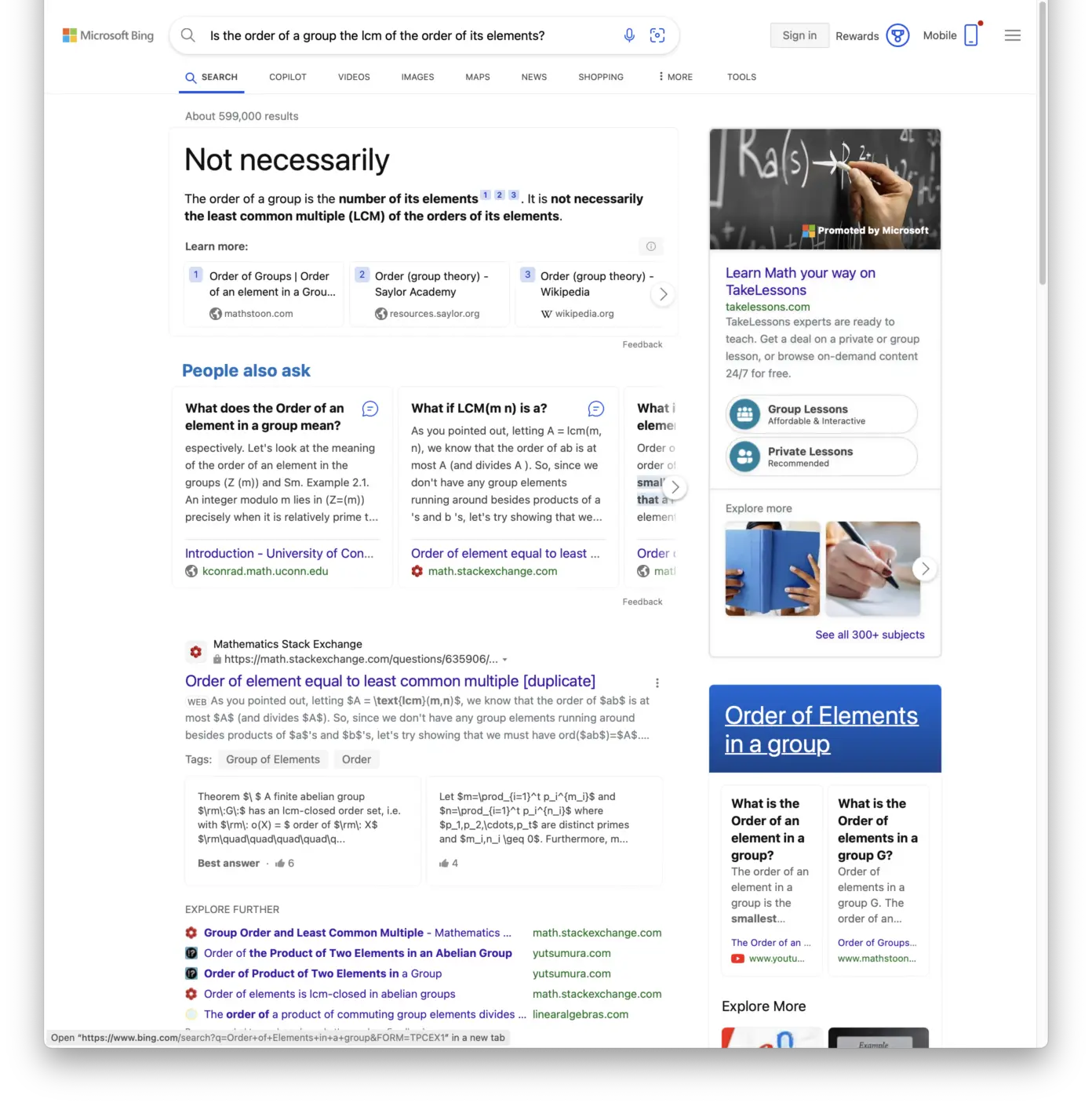

Bing

By default – if you’re not signed in and haven’t clicked through the Bing Copilot EULA – the results are decent. The very first substantial thing is a highlighted result from the Saylor Academy, with a relevant excerpt. That’s great. But the rest of the screen is rubbish: the remaining results are irrelevant, and the page is visually very noisy. In particular, the right-hand bar is full of GUI chrome and images that add absolutely no value here.

If you click through the Copilot EULA and try again, the results improve substantially. Now you get the most accurate answer of all the results in big, bold text right up front – “Not necessarily”. It then elaborates slightly by defining what the order actually is. It also includes three citations, all of which are relevant and helpful.

The rest of the screen is garbage, though. And still very visually noisy and distracting.

So what I’d tentatively call a good result – it’d be a great result if it did away with the irrelevant junk.

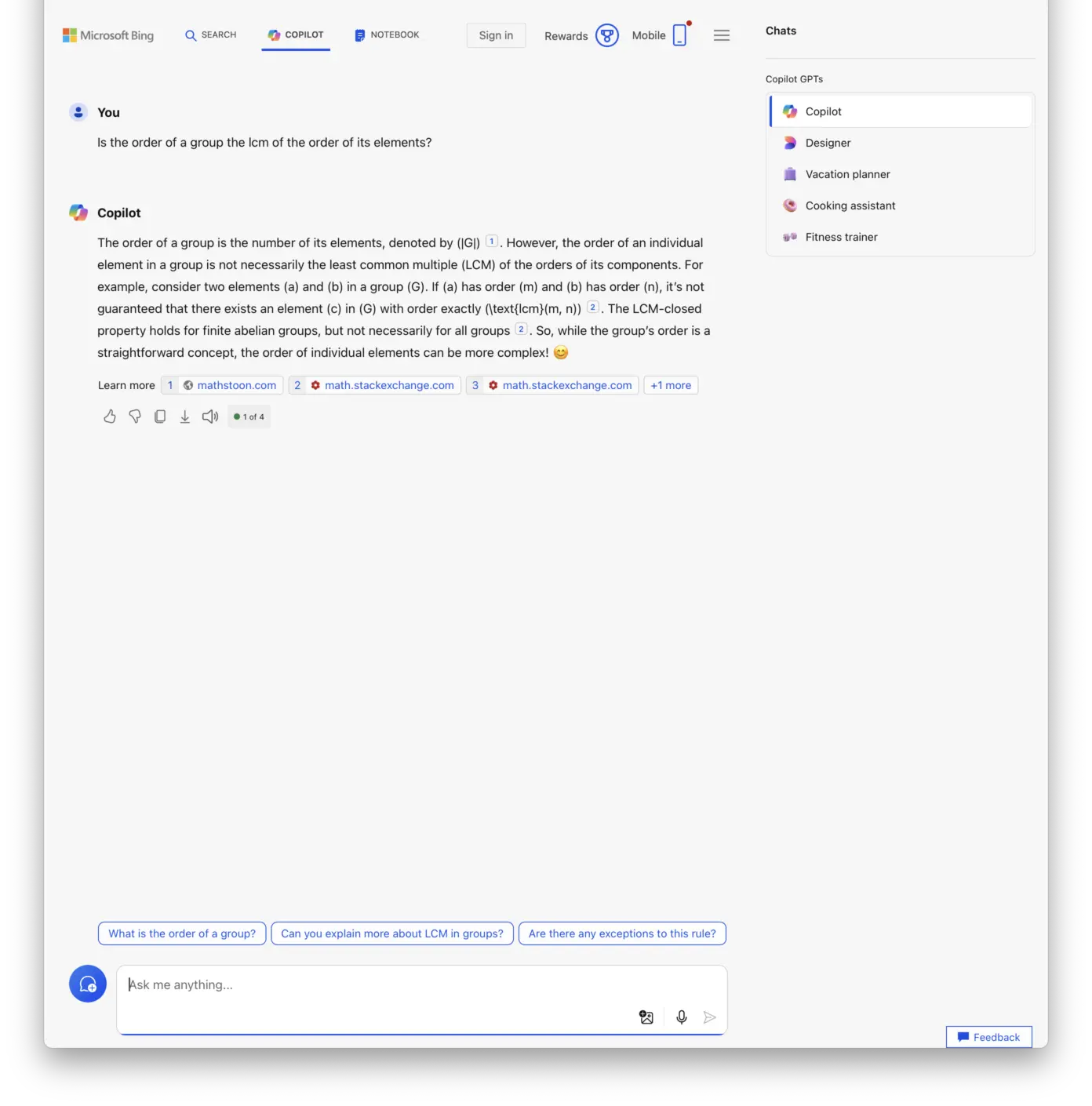

Bing Copilot

You can talk directly to Copilot if you wish, with a standard “chat” interface as popularised by ChatGPT.

The results are a bit varied.

Sometimes you get a decent, properly cited response (ignoring minor errors, such as how group order is denoted symbolically). To my eyes, though, the result reads very much like Wikipedia’s article, yet Wikipedia isn’t cited at all (which is both borderline dishonest and unhelpful, since the Wikipedia article is a much better source than StackExchange).

So, a decent result although not as good as what you get with simple Bing Search (with or without Copilot helping there).

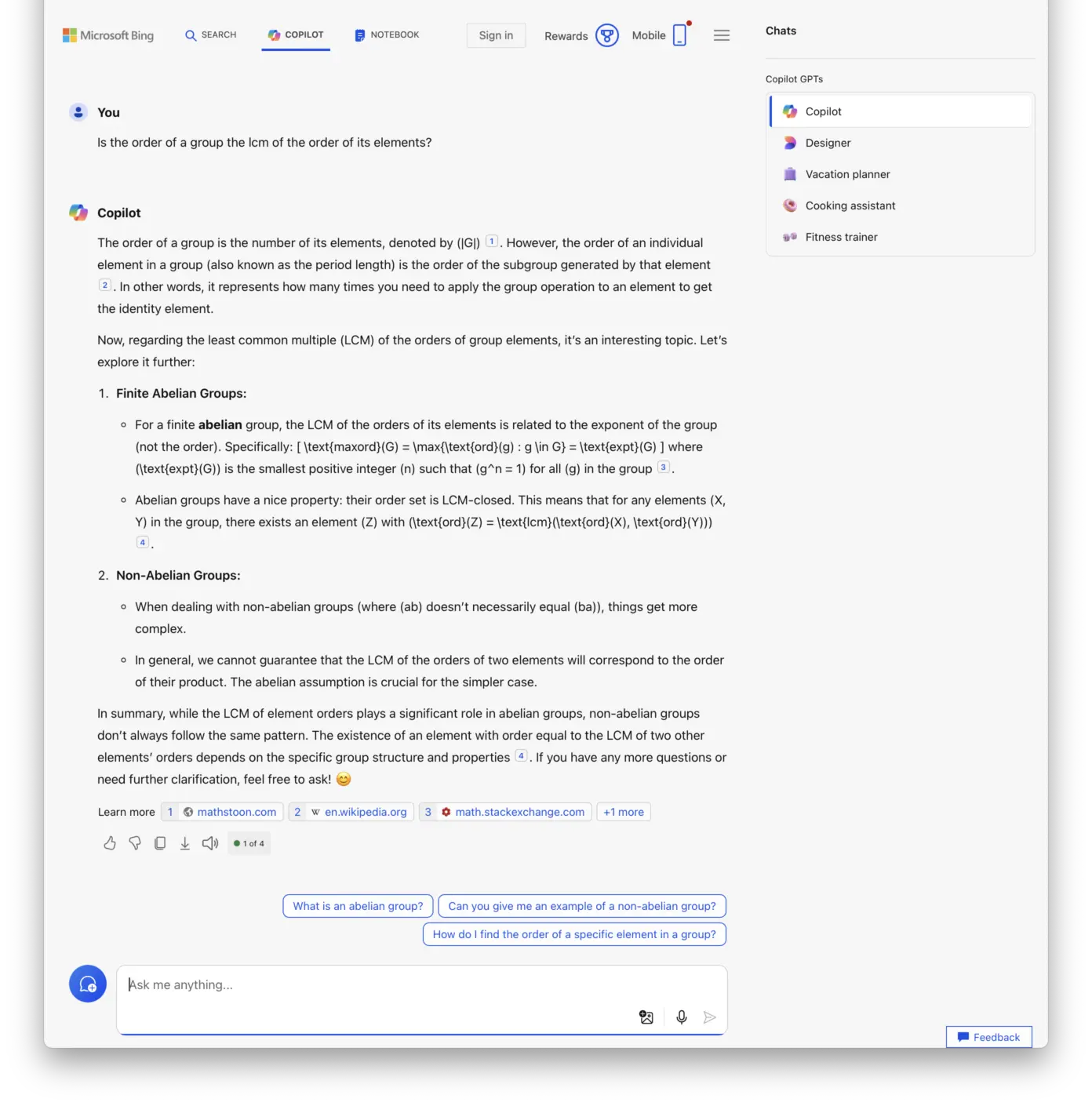

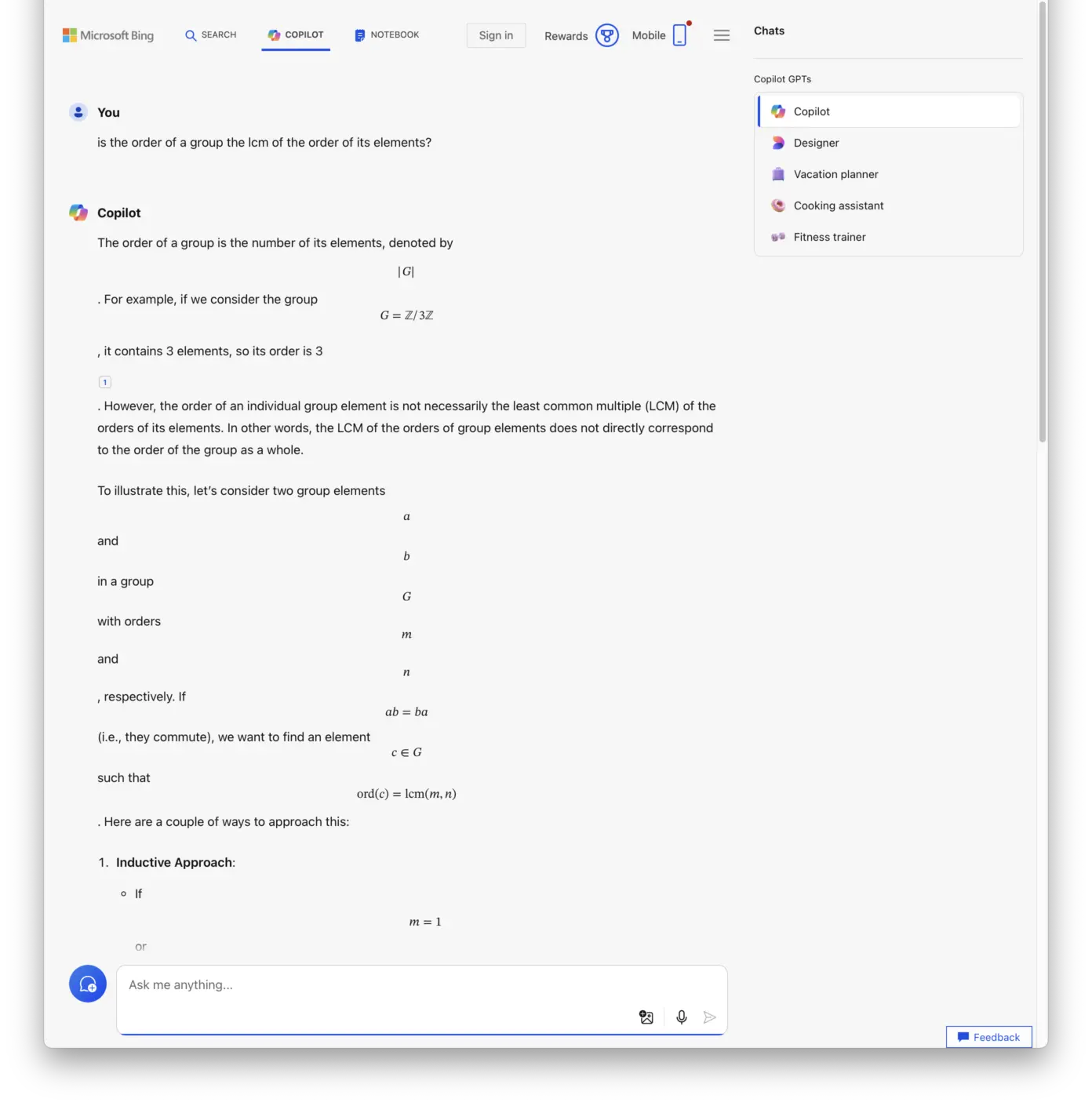

But sometimes you catch Copilot in an overly chatty mood, and the result – while still starting out well – tends to be verbose and tangent-heavy. Apparently Copilot thinks the tangent is “an interesting topic”, but I don’t, because it’s not relevant to what was asked. Note also how it fails to render the mathematical notation, instead just displaying LaTeX markup, which turns it into gibberish.

Oddly, at other times it does render mathematical notation correctly. Well, somewhat – the unnecessary line breaks are a dubious formatting choice.

In contrast to all this unpredictability, the Bing Search results were basically completely stable. And they’re better anyway. So a tolerable but uninspiring result from Copilot.

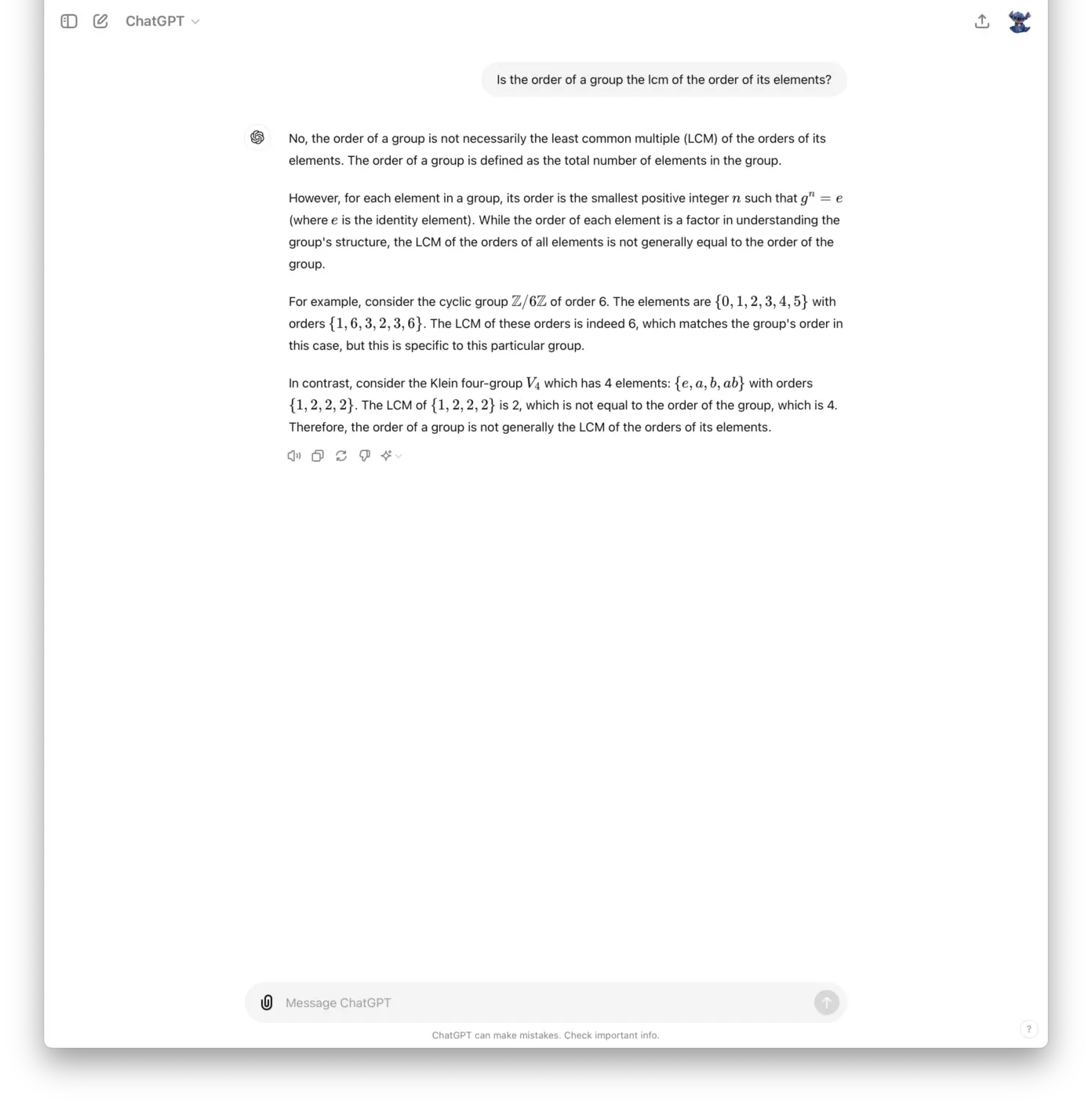

ChatGPT

A decent result in terms of answering the question, but there’s not a single citation. When I prompted it for citations, it pointed me towards three algebra textbooks, all of which are real books and probably technically relevant. But it doesn’t cite a specific chapter, let alone a page. A clearly inferior result to Kagi & Bing Copilot.

Interestingly, the text of the answer is very similar to what Rob got a year ago (which was presumably with ChatGPT 3.5), at least in its first couple of sentences. I suppose I shouldn’t be surprised that the answer is similar – it was correct and pretty helpful then, and still is – but apparently I expected its quality and content to be much more unpredictable over time.

As far as I can tell – not knowing much about the mathematical notation in play here, or formal group theory in general – its answer is correct this time, without comically bogus examples like the ones Rob saw. It’s nice that it continues to provide specific examples of where the LCM of the elements’ orders is and isn’t the group order.
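To make that distinction concrete, here’s a small sketch of my own (not taken from ChatGPT’s answer or Rob’s post) that computes the LCM of all the element orders (what group theorists call the group’s exponent) and compares it with the group order. It assumes finite abelian groups written as products of cyclic groups, Z_m1 × Z_m2 × …, and the function names are hypothetical, chosen just for illustration.

```python
from itertools import product
from math import gcd, lcm  # math.lcm requires Python 3.9+


def element_order(element, moduli):
    """Order of an element of Z_m1 x Z_m2 x ... (addition mod m, componentwise).

    In Z_m, the element x has order m // gcd(x, m) (so 0 has order 1);
    in a direct product, the order is the LCM of the component orders.
    """
    return lcm(*(m // gcd(x, m) for x, m in zip(element, moduli)))


def exponent_and_order(moduli):
    """Return (LCM of all element orders, number of elements in the group)."""
    elements = list(product(*(range(m) for m in moduli)))
    exponent = lcm(*(element_order(e, moduli) for e in elements))
    return exponent, len(elements)


if __name__ == "__main__":
    # Two cyclic groups of order 6, then two non-cyclic groups.
    for moduli in [(6,), (2, 3), (2, 2), (2, 4)]:
        exponent, order = exponent_and_order(moduli)
        name = " x ".join(f"Z{m}" for m in moduli)
        print(f"{name}: LCM of element orders = {exponent}, group order = {order}")
```

For Z6 and Z2 × Z3 (both cyclic), the two numbers agree at 6. For the Klein four-group Z2 × Z2, every non-identity element has order 2, so the LCM is 2 while the group has 4 elements; likewise Z2 × Z4 gives 4 versus 8. Since every element’s order divides the group order, the LCM always divides the group order, but it needn’t equal it.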

Note that I’m using the free version of ChatGPT (GPT-4o, at the time of writing).

1. At least, so says Wikipedia. Copilot itself categorically denies it’s based in any way on ChatGPT or OpenAI’s work, which seems pretty obviously a blatant lie. Unless it’s not just Wikipedia but CNET that’s lying, or, you know, Microsoft themselves. ↩︎

2. Admittedly I am getting rather tired of its bugginess on iOS, whereby it’ll randomly stop working: I’ll enter my search query into the Safari address bar and be greeted with a login dialog for Kagi. Even if I log in, my search query is lost and I have to start over again (which is super infuriating for non-trivial queries, given how tedious and error-prone iOS text entry is 😤).

   And it makes me do this for every search.

   This problem has come and gone over time, suggesting it’s a problem with the Kagi app or website. I wish they’d hurry up and fix it permanently. ↩︎